AWS Keys + Github = Disaster (Don't Be Like Me)

This blog highlights how simple and easy it is to make an honest mistake that can have serious ramifications. Thankfully, my scenario did not end with customer data being exposed. And before you ask, my employer's production environments were NEVER put at risk in any form. The environment detailed in this blog is a test environment isolated from the rest of my employer's assets and workloads in the Public Cloud.

I reference a few articles and studies, along with their findings, throughout this blog. I believe I credited them accordingly, but just in case, here are the sources up front:

Uber Breach - AWS Insider (Gladys Rama)

ZDNet - NCSU Github Keys Study (Catalin Cimpanu)

A personal story

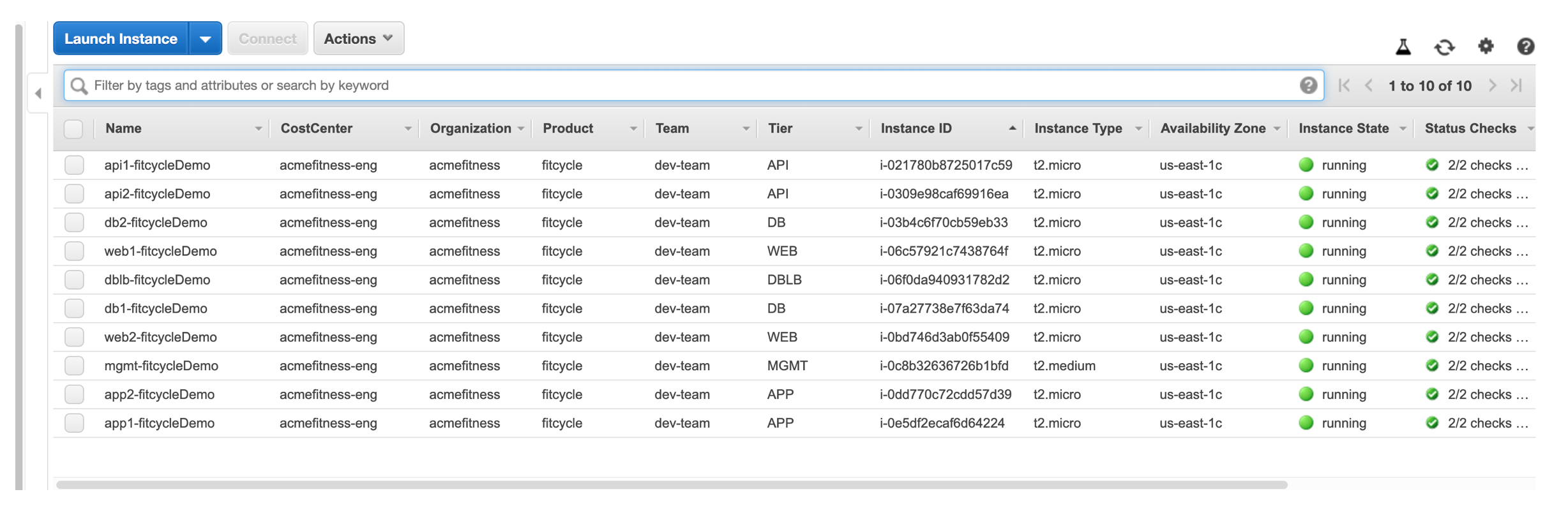

A few years ago, our team built an application called Fit Cycle: a simple 3-tier application with app servers, DB servers, web front ends and a few load balancers scattered throughout. The application was meant to demonstrate the power of our solutions as customers began to consume the Public Cloud. In this example, we were working with AWS. Our team was small and we divided up tasks evenly. Some worked on the application bits, while I focused most of my time on the automation and the cloud services the application would reside on. We built everything and could get the application running with a combination of Terraform, Ansible and a few manual steps.

The next phase of the project was to ensure the entire application could be spun up and torn down within the friendly confines of a CI/CD pipeline. For this, we farmed out some of the final work to one of our engineers, who built a fantastic Jenkins pipeline that provisioned the infrastructure, deployed the application bits and configured everything accordingly.

100% Complete and Fully Automated

This was a big milestone for us, but the engineer did not have access to our team's Github repository, so they created a TAR (or TGZ) file and shipped it over to me. As instructed, I decompressed it and uploaded the bits to a new public Github repository.

Disaster Strikes

There was a minor issue with the code that we uploaded to a Public Repository on Github…

AWS Access Key and Secret Access Key

Yes, shared keys were uploaded to a Public Github repository.

This happens all the time. This blog will focus on the realities of user error in the Public Cloud and a few methods to help us prevent or remedy an “AWS Keys on Public Repository” scenario.

Is it the Cloud Provider's fault? Or the User's fault?

According to Gartner,

“Through 2022, at least 95% of cloud security failures will be the customer’s fault”

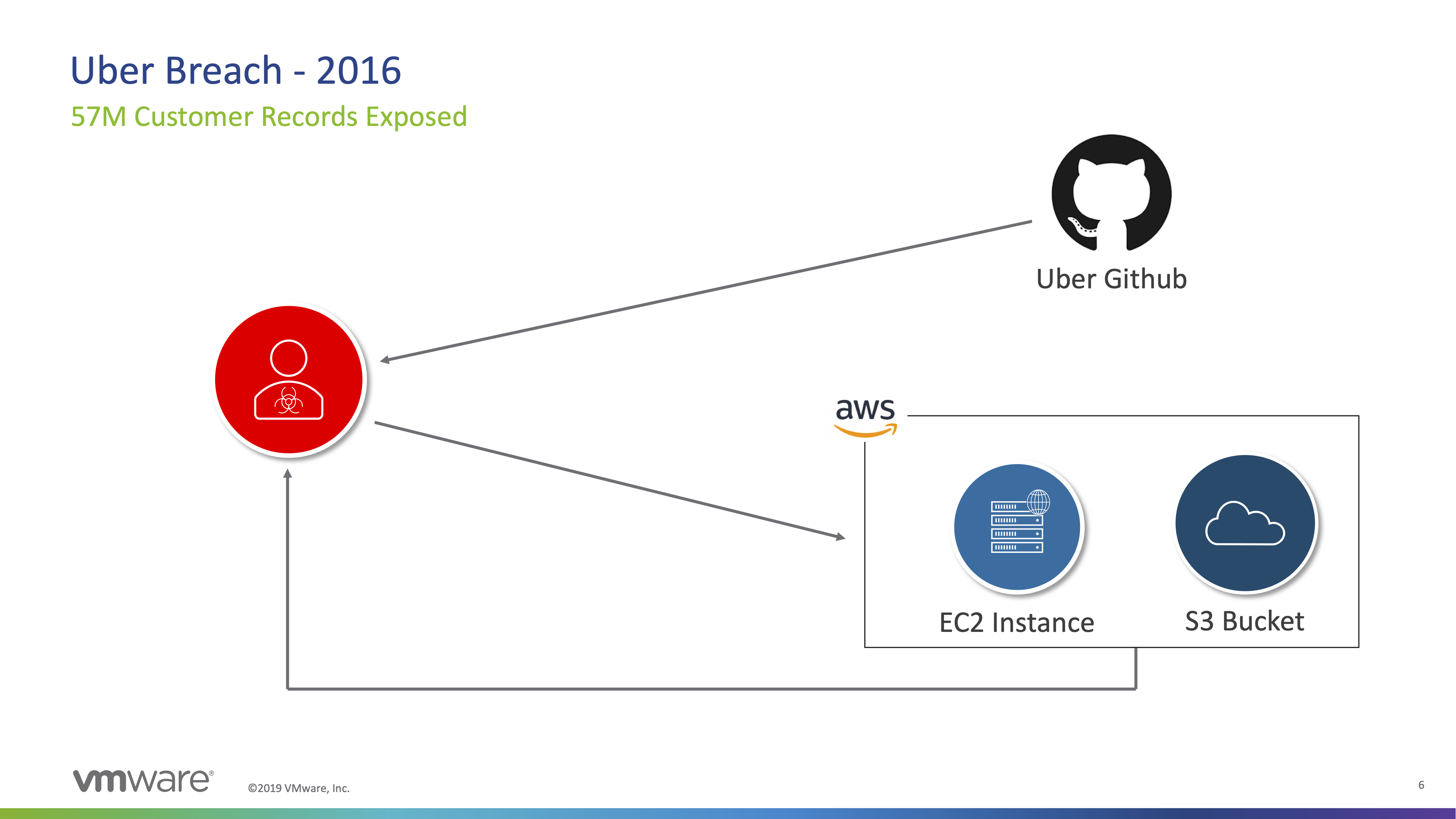

The Uber breach from 2016 exposed over 57M customer records because AWS keys were accessible via a Github repository (AWS Insider). The big difference between my mistake and the Uber incident is access to customer data or intellectual property. Thankfully, I only have access to controlled test environments!

No person or organization is immune to human error.

Check out Catalin Cimpanu's article from this past March:

Over 100,000 GitHub repos have leaked API or cryptographic keys

Catalin draws on data from a North Carolina State University (NCSU) study whose results were shared with Github.

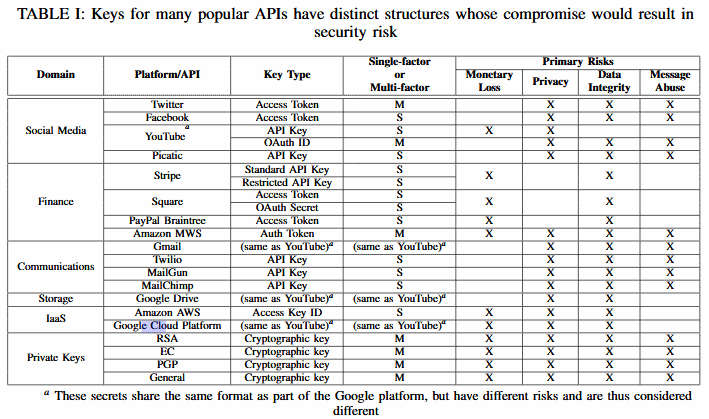

The area of this study I find most interesting is the format of the API keys used by major technology companies and the related security risk. Yes, keys for some platforms were easier to identify than others based on their format, structure and overall uniqueness.
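To make the point about key formats concrete: an AWS access key ID has a recognizable shape, a four-character prefix such as `AKIA` (long-term keys) or `ASIA` (temporary credentials) followed by 16 uppercase letters or digits. The minimal Python sketch below assumes that shape and is not taken from the study; it only shows how little it takes to spot such a key in plain text. `AKIAIOSFODNN7EXAMPLE` is the placeholder key ID AWS uses in its own documentation.

```python
import re

# Assumed format: 4-character prefix (AKIA = long-term key, ASIA = temporary
# credentials) followed by 16 uppercase letters or digits, 20 characters total.
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b")


def find_access_key_ids(text: str) -> list[str]:
    """Return every substring of `text` shaped like an AWS access key ID."""
    return ACCESS_KEY_ID.findall(text)


if __name__ == "__main__":
    sample = 'aws_access_key_id = AKIAIOSFODNN7EXAMPLE\nregion = "us-east-1"'
    print(find_access_key_ids(sample))  # ['AKIAIOSFODNN7EXAMPLE']
```

A pattern this specific produces few false positives, which is exactly why distinctive key formats are easy to harvest from public repositories.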

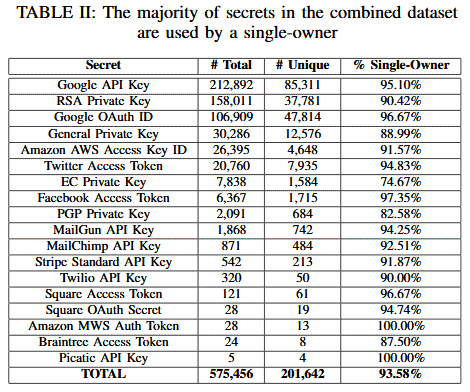

According to the study by the NCSU team, over 500 thousand API and cryptographic keys, of which some 200 thousand were unique, were spread across 100,000 Github projects.

Across Google Cloud and Amazon Web Services, there are plenty of examples of human error. Yes, I count as one of the users who made a mistake.

Security Control Challenges

VMware is producing over 500 thousand containers and 150 thousand Virtual Machines on a daily basis. The following are the main security control “challenges” in a dynamic environment like VMware's:

- Constantly Changing Environment

- Slow Reporting

- Risk Prioritization

On the surface, these may seem daunting and difficult to overcome. Security in the Public Cloud has forced VMware IT to adapt and evolve beyond historical methods.

Secure by Default

- Configuration Visibility

- Log Collection

- Threat Intelligence

- Activity Monitoring

The above methods for mitigating risk could each fill their own blog! Without going into great detail, each of the above steps (among others) is taken every day within VMware IT to secure our applications in the Public Cloud.

Automation

DevOps, CI/CD Pipelines and Automation are big topics these days. Like any organization, VMware has embraced these challenges and continues to improve its processes and tooling on a daily basis.

Within its Automation methodology, VMware IT focuses on a few specific areas:

- Guardrails

- Change Management

- Security Orchestration, Automation and Response (SOAR)

I won't go into specifics, but below are a few methodologies and tools that VMware IT has embraced to overcome the challenges of Security in the Public Cloud.

Continuous Verification

The CloudJourney team has been pontificating about the idea of Continuous Verification for some time. Our article on TheNewStack from July, titled “Continuous Verification: The Missing Link to Fully Automate Your Pipeline”, highlights the challenges and shortcomings of “automation” within most organizations.

To help overcome the risk prioritization and slow reporting challenges, VMware IT has begun to implement DevSecOps, or “Security in the Pipeline” as I like to call it.

Tooling

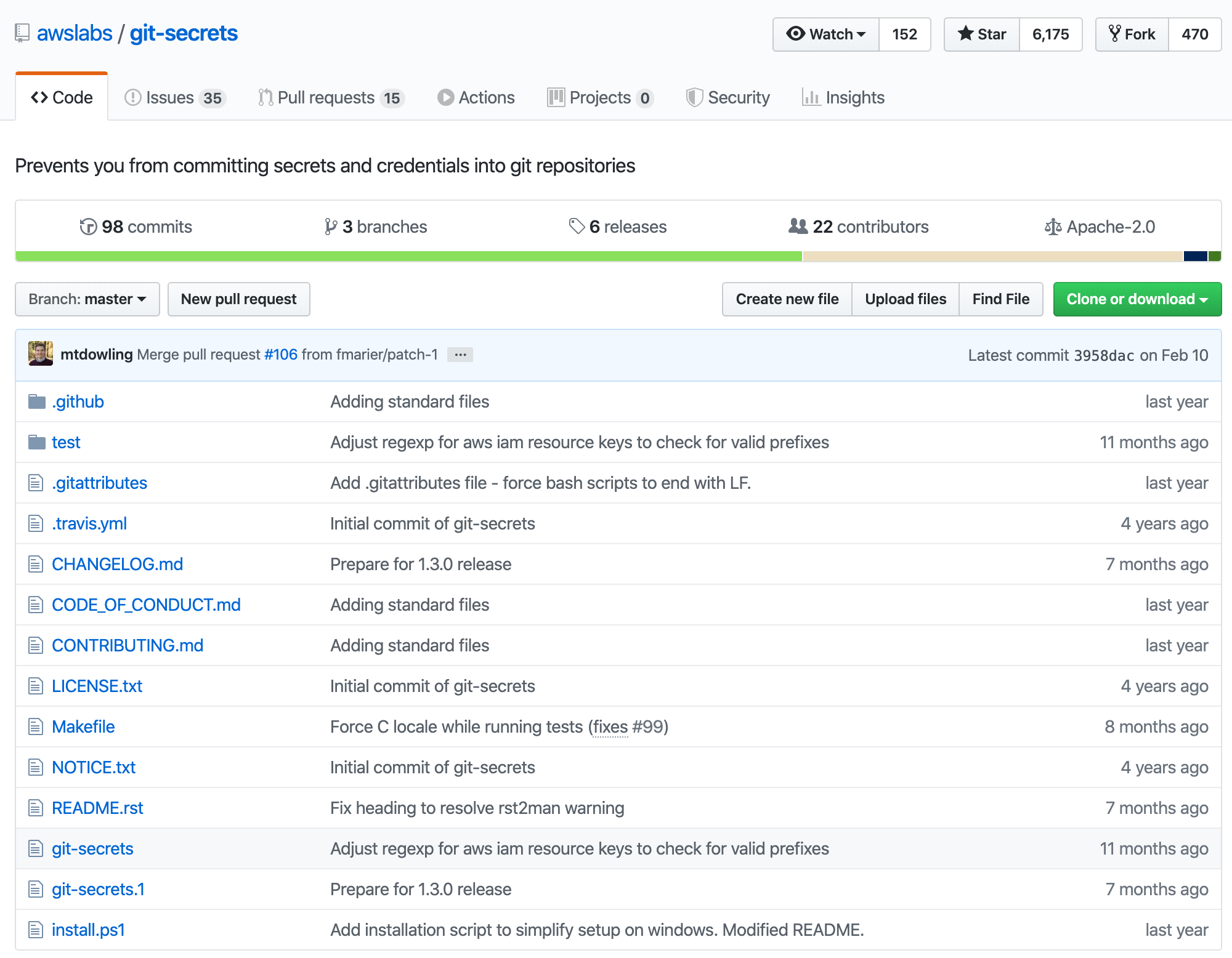

git-secrets

One of the tools I wish I had in place when I uploaded my peer's AWS Keys to Github is git-secrets.

git-secrets is a fantastic solution and the README gets right to the point:

Prevents you from committing passwords and other sensitive information to a git repository.

I always replace ‘you’ with Sean!

Description

git-secrets scans commits, commit messages, and --no-ff merges to prevent adding secrets into your git repositories. If a commit, commit message, or any commit in a --no-ff merge history matches one of your configured prohibited regular expression patterns, then the commit is rejected.

Synopsis

git secrets --scan [-r|--recursive] [--cached] [--no-index] [--untracked] [<files>...]

git secrets --scan-history

git secrets --install [-f|--force] [<target-directory>]

git secrets --list [--global]

git secrets --add [-a|--allowed] [-l|--literal] [--global] <pattern>

git secrets --add-provider [--global] <command> [arguments...]

git secrets --register-aws [--global]

git secrets --aws-provider [<credentials-file>]
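The reject-on-match behavior described above can be sketched in a few lines of Python as a pre-commit hook. This is a hypothetical illustration, not git-secrets itself: the pattern lists are assumptions, and in practice you would simply run `git secrets --install` and `git secrets --register-aws`. The sketch reads the staged diff with `git diff --cached`, checks each added line against prohibited patterns, lets through lines that match an allowed pattern, and exits non-zero so git aborts the commit.

```python
#!/usr/bin/env python3
"""Hypothetical pre-commit hook: block commits that add AWS-looking secrets."""
import os
import re
import subprocess
import sys

# Assumed patterns (illustrative, not git-secrets' own list).
PROHIBITED = [
    re.compile(r"(?:AKIA|ASIA)[A-Z0-9]{16}"),            # access key ID shape
    re.compile(r"aws_secret_access_key\s*=\s*\S{40}"),  # secret in a config file
]
# Lines matching an allowed pattern are let through, e.g. AWS's documented
# example key ID.
ALLOWED = [re.compile(r"AKIAIOSFODNN7EXAMPLE")]


def find_violations(diff_text: str) -> list[str]:
    """Return the added lines of a unified diff that match a prohibited pattern."""
    violations = []
    for line in diff_text.splitlines():
        # Only inspect added lines, skipping the "+++ b/<file>" header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        prohibited = any(p.search(line) for p in PROHIBITED)
        allowed = any(a.search(line) for a in ALLOWED)
        if prohibited and not allowed:
            violations.append(line[1:])
    return violations


def main() -> int:
    # The staged diff is exactly what the next commit would contain.
    diff = subprocess.run(
        ["git", "diff", "--cached", "--unified=0"],
        capture_output=True, text=True, check=True,
    ).stdout
    violations = find_violations(diff)
    for line in violations:
        print(f"[blocked] {line}", file=sys.stderr)
    # A non-zero exit status from a pre-commit hook makes git abort the commit.
    return 1 if violations else 0


# Only act when git invokes this file as .git/hooks/pre-commit.
if __name__ == "__main__" and os.path.basename(sys.argv[0]) == "pre-commit":
    sys.exit(main())
```

Saved as `.git/hooks/pre-commit` and marked executable, the script runs before every commit. Scanning only the staged diff keeps the check fast and focused on what is about to be committed.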

Big shoutout to Michael Dowling for his work on this project. I strongly recommend using git-secrets to ensure you don’t end up becoming an example like me!

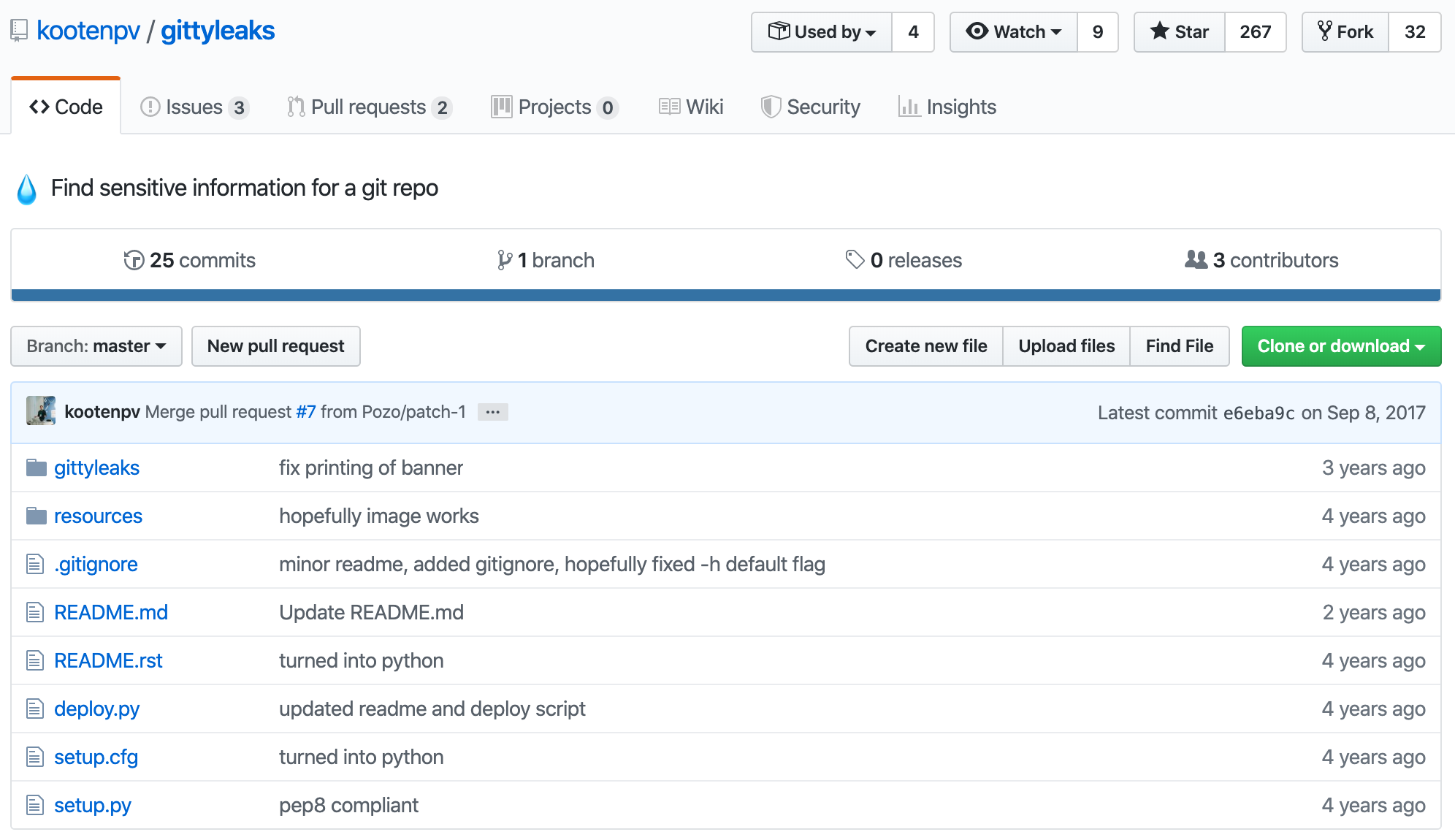

gittyleaks

Another fantastic tool that you should add to your bag of tricks is *gittyleaks*. *gittyleaks* is a tool you run inside your repositories to detect where sensitive data has leaked. I suggest running it before a *git push* to a remote repository, especially a public one.

The README states the following:

Use this tool to detect where the mistakes are in your repos. It works by trying to find words like ‘username’, ‘password’, and ‘email’ and shortenings in quoted strings, config style or JSON format. It captures the value assigned to it (after meeting some conditions) for further work.

Command Usage

gittyleaks # default "smart" filter

gittyleaks --find-anything # find anything remotely suspicious

gittyleaks --excluding $ . [ example , # exclude some string matches (e.g. `$` occurs)

gittyleaks --case-sensitive # set it to be strict about case
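A rough Python approximation of the heuristic the README describes follows. It is a sketch under stated assumptions, not gittyleaks' actual code: the keyword list (including shortenings such as `pwd` and `pass`) and the placeholder filter are hypothetical stand-ins for the tool's own conditions. It finds credential-like keys in JSON, config-style and quoted assignments and returns the captured key/value pairs.

```python
import re

# Hypothetical keyword list: the words named in the README plus a few assumed
# shortenings. Any key containing one of them is treated as suspicious.
KEYWORDS = r"(?:username|user|password|pass|pwd|email|mail)"

# Matches JSON ("password": "x"), config style (password = x, password: x)
# and quoted assignments (password='x'), capturing the key and its value.
PATTERN = re.compile(
    r"""["']?(?P<key>\w*""" + KEYWORDS + r"""\w*)["']?"""  # optionally quoted key
    r"""\s*[:=]\s*"""                                       # ':' or '=' separator
    r"""["']?(?P<value>[^"'\s,}]+)""",                      # optionally quoted value
    re.IGNORECASE,
)

# Assumed placeholder values to ignore.
PLACEHOLDERS = {"example", "changeme", "none", "null"}


def find_leaks(text: str) -> list[tuple[str, str]]:
    """Return (key, value) pairs where a credential-like key is assigned a value."""
    leaks = []
    for match in PATTERN.finditer(text):
        value = match.group("value")
        # Skip variable references such as $DB_PASSWORD and obvious placeholders.
        if value.startswith("$") or value.lower() in PLACEHOLDERS:
            continue
        leaks.append((match.group("key"), value))
    return leaks
```

Because any key containing `pass` or `user` is flagged, expect false positives; that is the kind of noise the `--excluding` option shown above helps trim.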

The contributors behind gittyleaks are also preparing to update the code to remedy issues based on Github's recommendations for handling sensitive data. You can find out more in Github's own guidance on the topic.

VMware Tooling

VMware Secure State

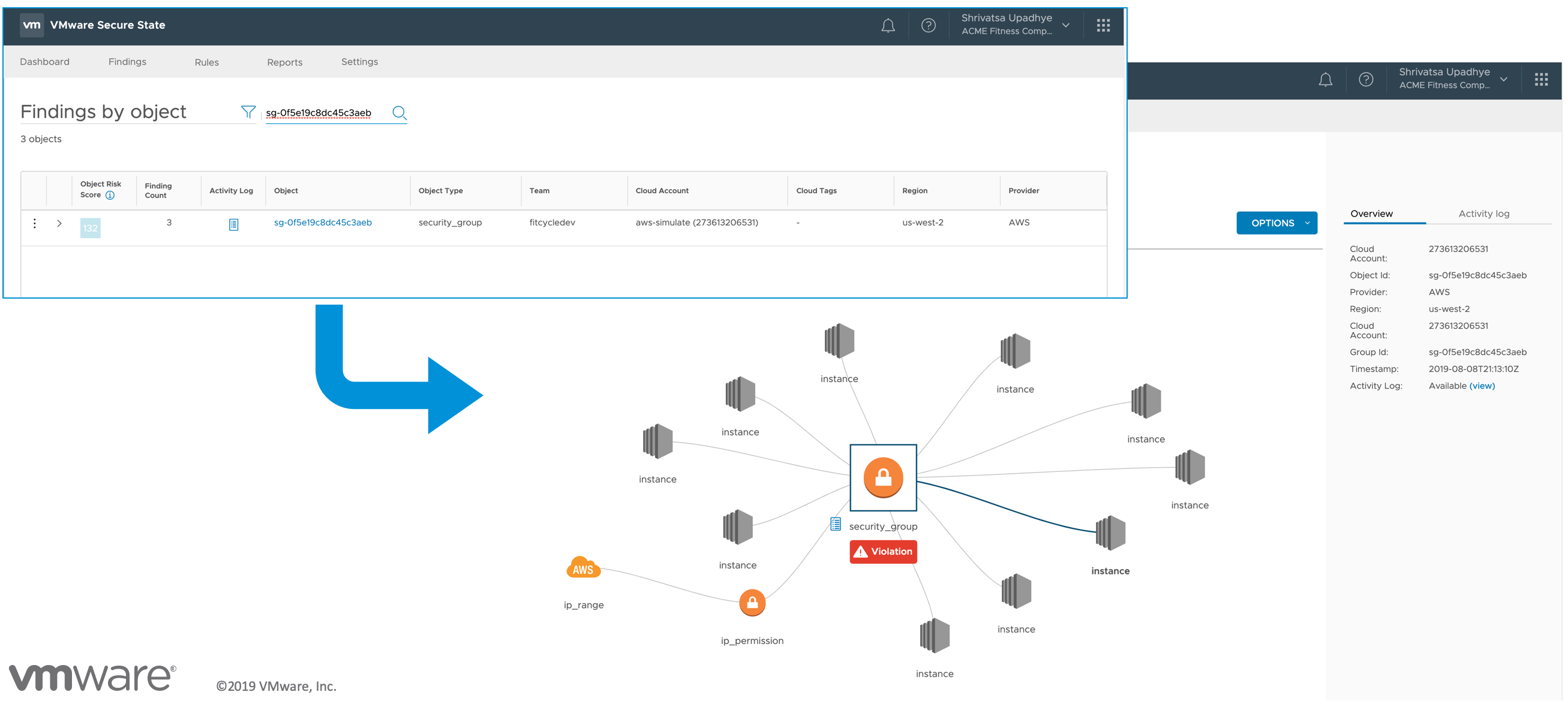

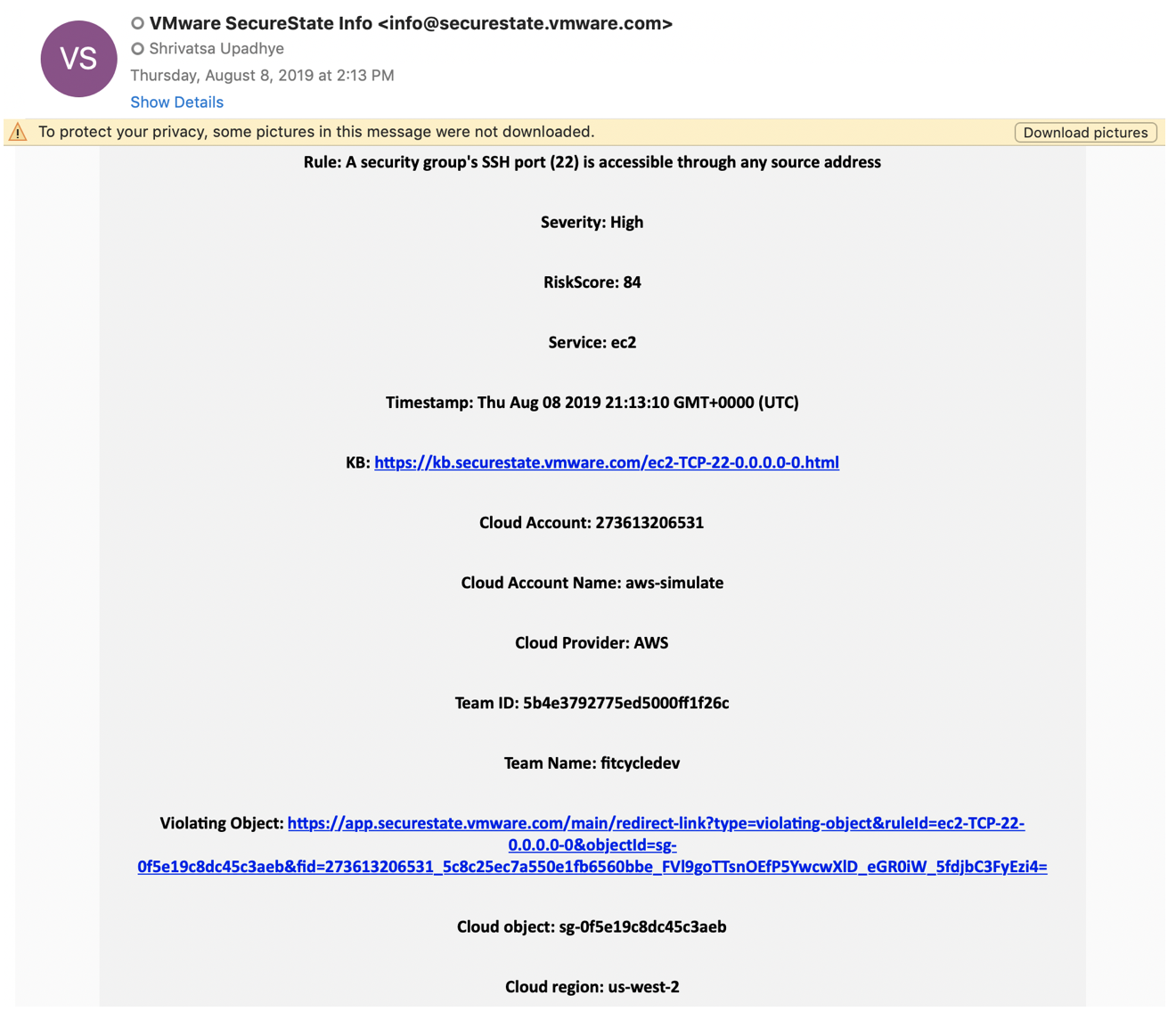

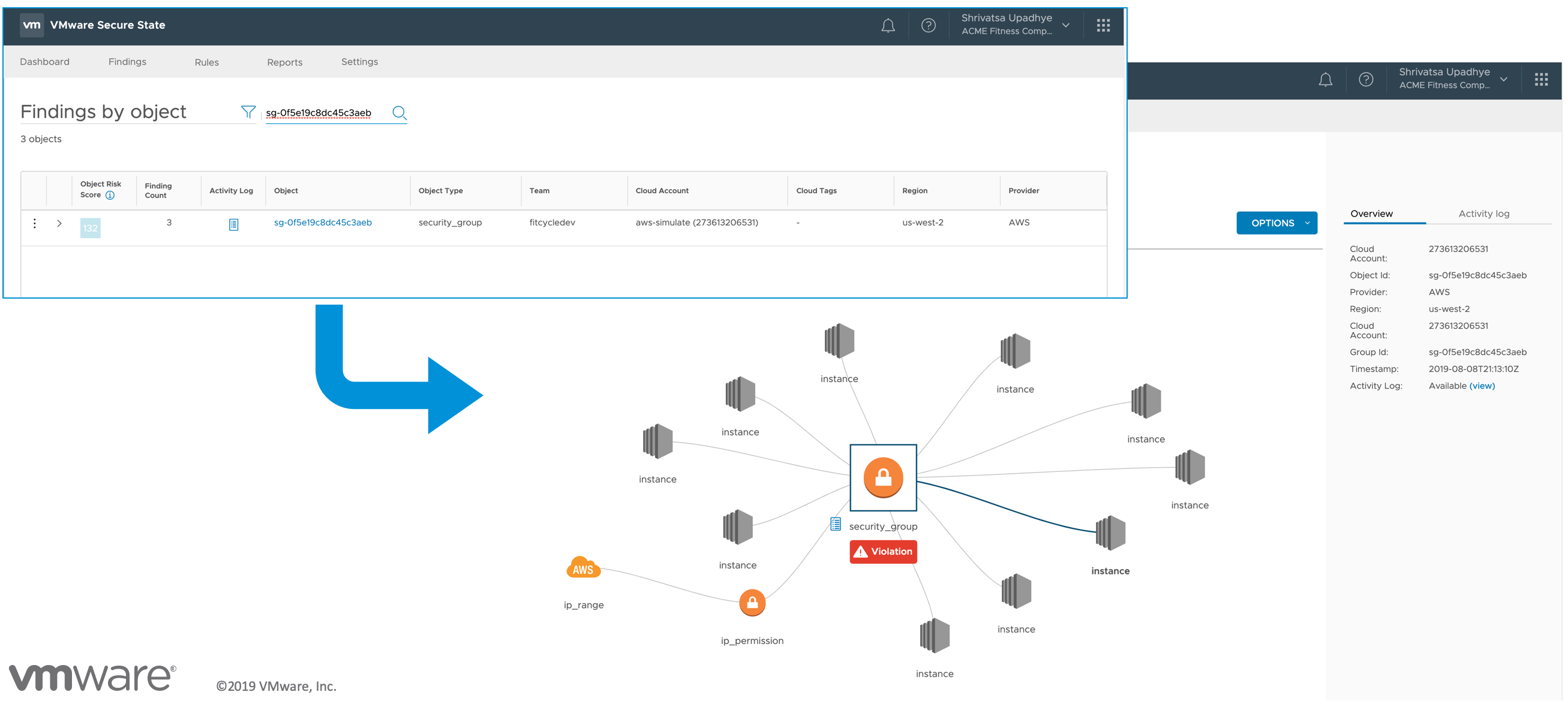

As mentioned before, VMware IT firmly believes in Activity Monitoring, and VMware Secure State provides near real-time analysis of objects within your AWS and Azure environments. Below are a few examples of how VMware IT uses VMware Secure State to identify and remedy risks.

Take my mistake (uploading AWS keys to Github) as an example of how VMware Secure State can be used to alert on and remedy its effects. I am not claiming that VMware Secure State can tell you when AWS keys are uploaded to Github, but it can highlight misconfigurations, which helps mitigate the kind of activity seen in other incidents. In the following example, I look at an application with a misconfigured firewall rule.

Breach Alert

The above screenshot highlights the deployed resources in the AWS EC2 console. This application represents a traditional 3-tier application with Web, App and Database servers plus a few load balancers spread across the stack.

The screenshot highlights an email alert from VMware Secure State with some very important details.

- Rule - A Security Group’s SSH Port (22) is Accessible through any Source Address

- Severity - High

- Risk Score

- Service - EC2

- Timestamp

- KB Article

- Cloud Account

The alert also includes a few other details that are pertinent to specific environments.
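For readers who want to reproduce the logic of the rule above (“A Security Group’s SSH Port (22) is Accessible through any Source Address”) in their own pipeline, here is a minimal Python sketch. It is not how VMware Secure State evaluates the rule; it assumes each security group is a dict shaped like an entry of the `SecurityGroups` list returned by the EC2 `DescribeSecurityGroups` API, with ingress rules under `IpPermissions`.

```python
# Illustrative check only; assumes EC2 DescribeSecurityGroups-shaped dicts.
OPEN_V4 = "0.0.0.0/0"
OPEN_V6 = "::/0"


def allows_port(permission: dict, port: int) -> bool:
    """True if the ingress permission covers the given TCP port."""
    protocol = permission.get("IpProtocol")
    if protocol == "-1":  # "-1" means all protocols and all ports
        return True
    if protocol not in ("tcp", "6"):
        return False
    return permission.get("FromPort", 0) <= port <= permission.get("ToPort", -1)


def ssh_open_to_world(group: dict) -> bool:
    """True if any ingress rule exposes port 22 to every source address."""
    for permission in group.get("IpPermissions", []):
        if not allows_port(permission, 22):
            continue
        v4 = {r.get("CidrIp") for r in permission.get("IpRanges", [])}
        v6 = {r.get("CidrIpv6") for r in permission.get("Ipv6Ranges", [])}
        if OPEN_V4 in v4 or OPEN_V6 in v6:
            return True
    return False
```

With boto3 installed and credentials configured, `boto3.client("ec2").describe_security_groups()["SecurityGroups"]` returns groups in this shape (larger accounts may need pagination).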

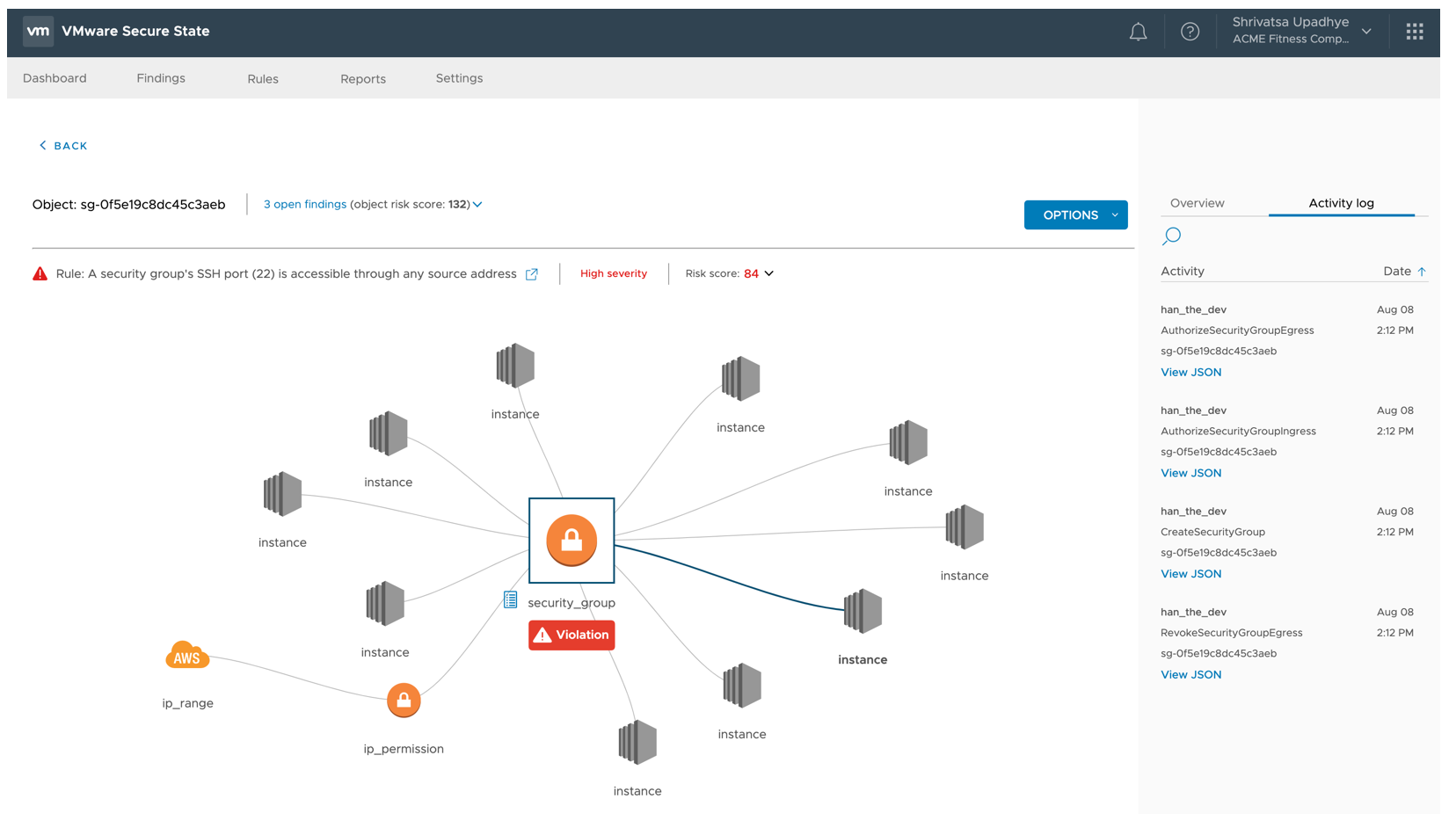

Notice how VMware Secure State lets you select the Security Group in violation and see each of its associated objects, including EC2 Instances, IP Ranges and IP Permissions, within a single view.

VMware Secure State highlights the associated activity related to the Security Group within the same screen to provide additional context.

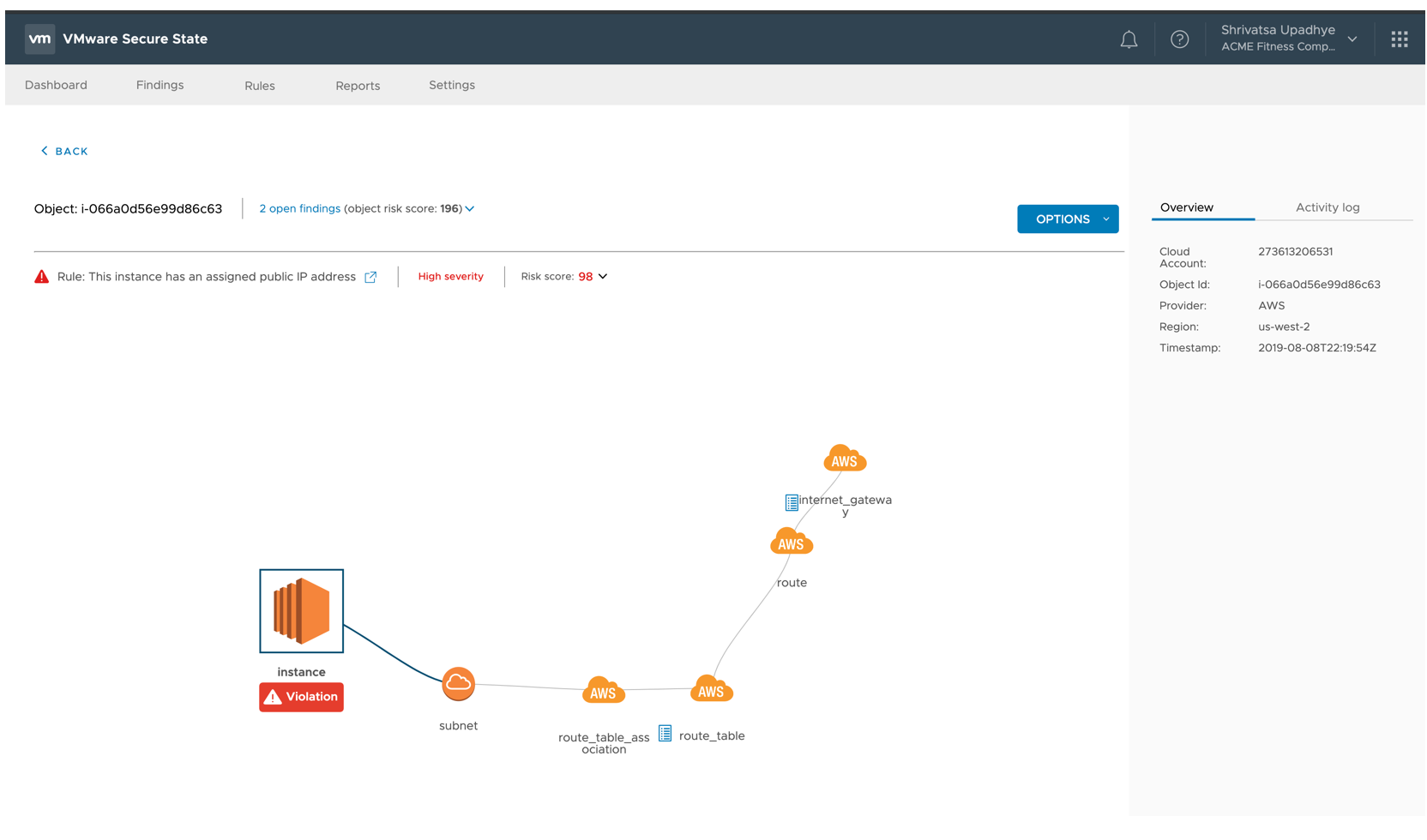

This last screen completes the picture and highlights that this specific EC2 instance is publicly addressable and, with SSH open to any source address, therefore accessible from the internet.
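The correlation in that last screen, an open Security Group attached to a publicly addressable instance, can be sketched the same way. This hypothetical Python helper assumes instance dicts shaped like the `Instances` entries of an EC2 `DescribeInstances` response (`InstanceId`, `PublicIpAddress`, `SecurityGroups`) and a set of Security Group IDs already flagged by a rule such as the SSH rule in the alert above.

```python
def exposed_instances(instances: list[dict], flagged_group_ids: set[str]) -> list[str]:
    """IDs of instances that have a public IP and at least one flagged group."""
    exposed = []
    for instance in instances:
        if not instance.get("PublicIpAddress"):
            continue  # no public IPv4 address, so this sketch skips it
        group_ids = {g["GroupId"] for g in instance.get("SecurityGroups", [])}
        if group_ids & flagged_group_ids:
            exposed.append(instance["InstanceId"])
    return exposed
```

Requiring both conditions mirrors the idea of connected threats: an open rule on a private instance, or a public instance behind tight rules, is lower priority than the combination.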

As stated before, VMware IT and many other organizations are utilizing solutions like VMware Secure State in the pipeline to ensure these types of misconfigurations are halted during the deployment of the application.

Take a look at some of the other blogs the CloudJourney IO team has written regarding VMware Secure State.

How to detect and prevent data breaches in AWS?

Relationships and Connected Threats in the Public Cloud

CIS Benchmarks for AWS with CloudHealth and VMware Secure State

CloudHealth by VMware

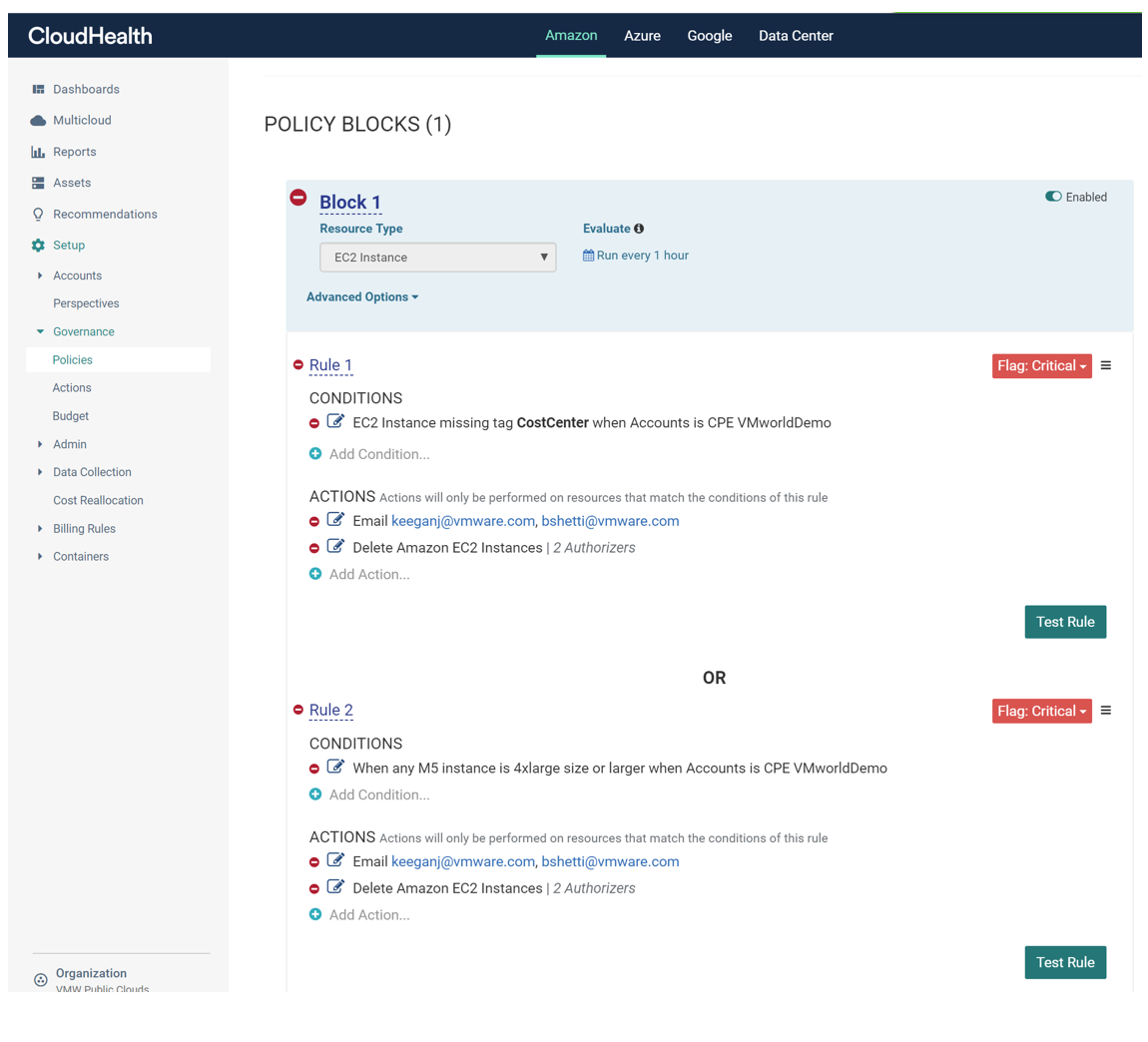

CloudHealth by VMware provides far more than Cost Management, Rightsizing, Budget Reclamation and many other cost-saving benefits. The second phase of consumption within the CloudHealth platform is Security and Governance. Going back to my example of uploading my peer's AWS keys to Github, let's look at a few ways CloudHealth by VMware can be used to remedy the effects of my mistake.

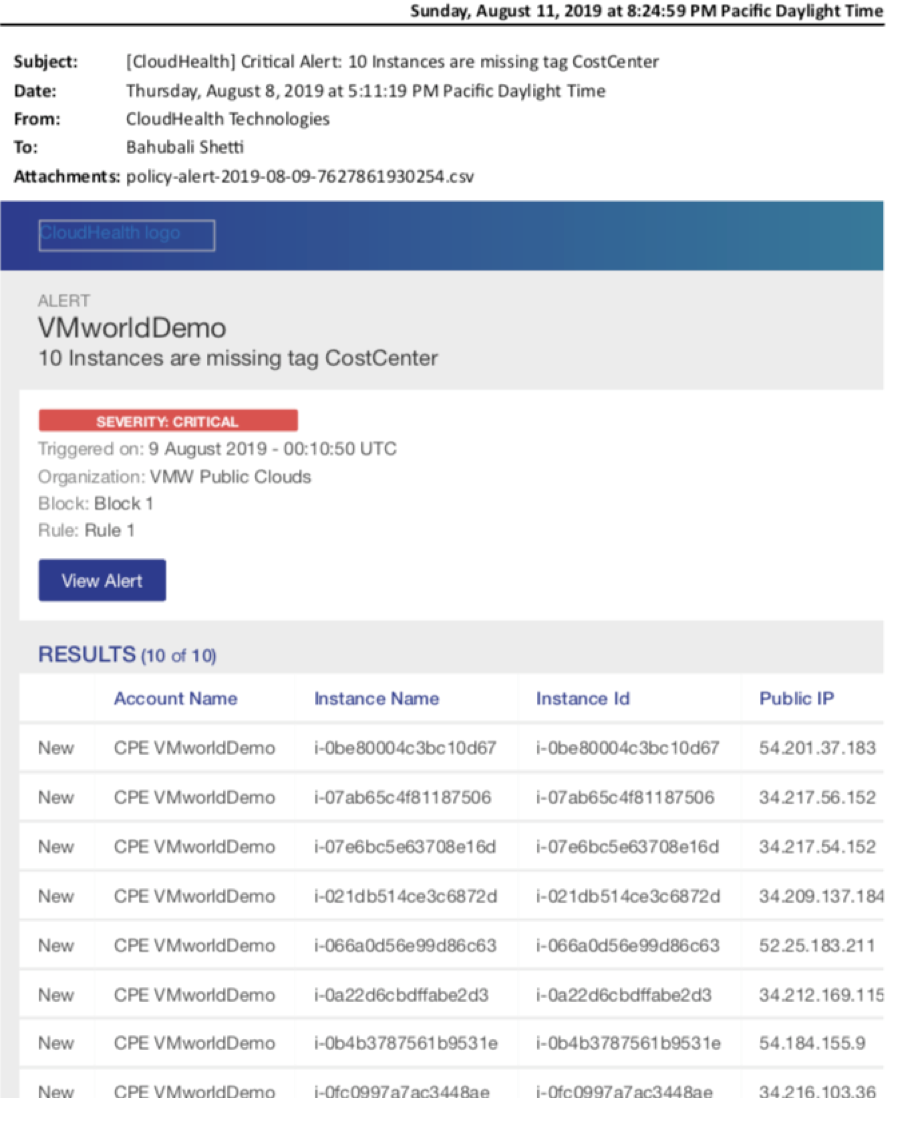

Detect Abnormal Instances

The policy above highlights a few rules that ensure abnormal usage triggers a notification.

Rule 1

- Condition - EC2 Instance missing tag CostCenter

- Action 1 - Notify via Email

- Action 2 - Delete EC2 Instance

This rule targets deployed objects that do not follow organizational process and best practice. Going back to my scenario, CloudHealth would have recognized the EC2 instances deployed by whoever used my published keys, based on the lack of tags applied during the creation process. Continuous Verification would require every object provisioned in the pipeline to include specific tags; in this example, a CostCenter tag.
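Rule 1 boils down to a simple check. The Python sketch below is a hypothetical illustration, not CloudHealth's policy engine or API: it assumes instance dicts with EC2-style `Tags` lists (`[{"Key": ..., "Value": ...}]`) and, as an added assumption, treats a `CostCenter` tag with an empty value as missing.

```python
REQUIRED_TAG = "CostCenter"  # the tag Rule 1 checks for


def missing_required_tag(instances: list[dict], tag_key: str = REQUIRED_TAG) -> list[str]:
    """Return IDs of instances without a non-empty `tag_key` tag."""
    flagged = []
    for instance in instances:
        # EC2-style tags: [{"Key": "CostCenter", "Value": "1234"}, ...]
        tagged = {t["Key"] for t in instance.get("Tags", []) if t.get("Value")}
        if tag_key not in tagged:
            flagged.append(instance["InstanceId"])
    return flagged
```

An instance launched with leaked keys by someone outside the pipeline is unlikely to carry the organization's tags, which is what makes a tagging rule a useful tripwire.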

Rule 2

- Condition - EC2 Instance larger than 4xLarge

- Action 1 - Notify via Email

- Action 2 - Delete EC2 Instance

While these rules are reactive, they do mitigate some of the risk of rogue object creation and the subsequent expense related to a breach.
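Rule 2 needs a notion of “larger than 4xLarge”. One way to sketch it, assuming EC2-style type names such as `m5.8xlarge` and a rough ordering of size suffixes that is my own approximation rather than CloudHealth's logic, is to convert each size into multiples of `xlarge`. Sizes the sketch does not recognize, such as `metal`, are treated as oversized so they get flagged for review.

```python
# Assumed rough ordering of EC2 size suffixes, in multiples of "xlarge".
NAMED_SIZES = {
    "nano": 1 / 32, "micro": 1 / 16, "small": 1 / 8,
    "medium": 1 / 4, "large": 1 / 2, "xlarge": 1,
}


def size_units(size: str) -> float:
    """Convert a size suffix such as 'large' or '8xlarge' to xlarge units."""
    if size in NAMED_SIZES:
        return NAMED_SIZES[size]
    multiplier = size[: -len("xlarge")]
    if size.endswith("xlarge") and multiplier.isdigit():
        return float(multiplier)
    return float("inf")  # unknown sizes such as "metal" are treated as oversized


def is_oversized(instance_type: str, limit: str = "4xlarge") -> bool:
    """True if an instance type such as 'm5.8xlarge' is larger than `limit`."""
    size = instance_type.split(".", 1)[-1]
    return size_units(size) > size_units(limit)
```

Comparing sizes rather than exact type names keeps the rule valid across instance families.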

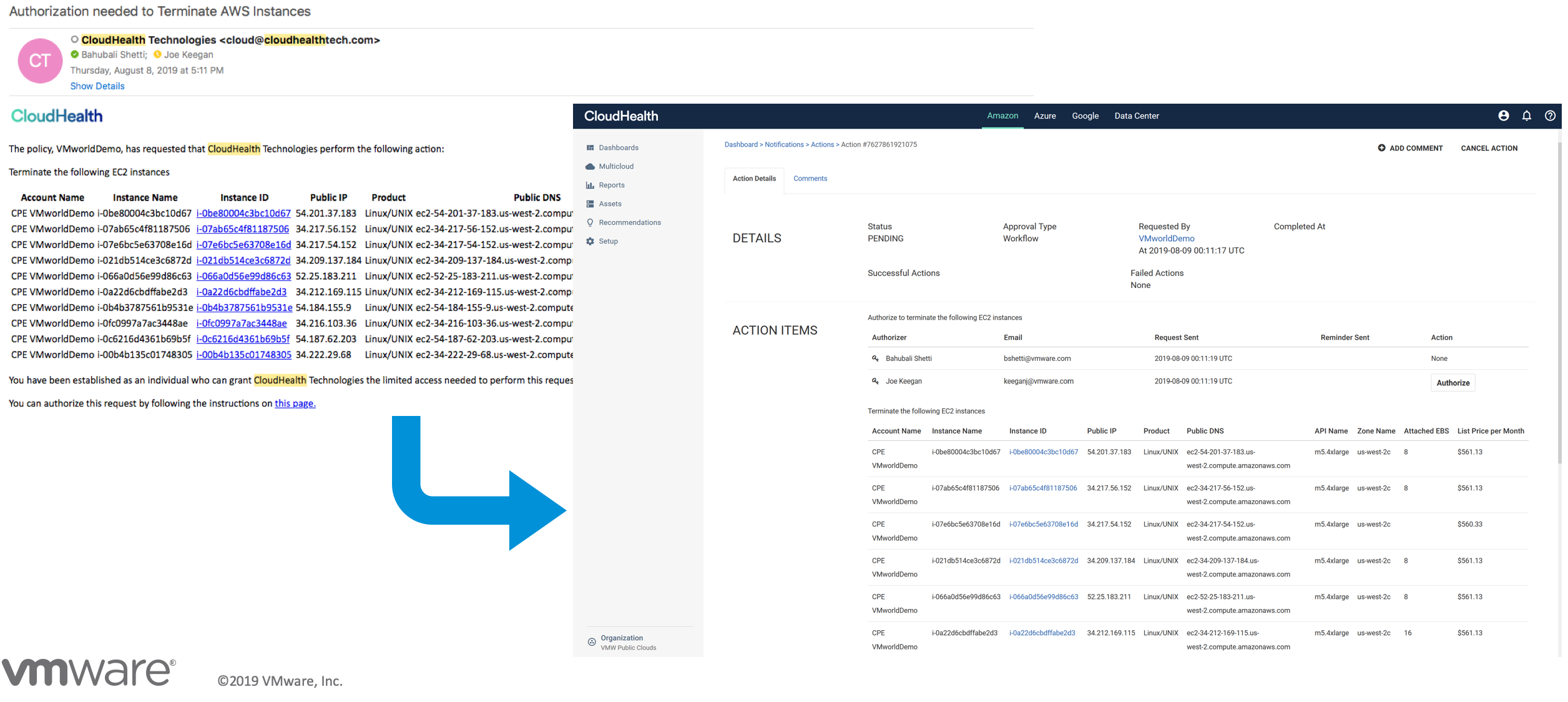

Notification and Deletion

Notice that the email alerts the user and provides relevant context about which EC2 instances were created and subsequently deleted.

This combined screenshot highlights not only the email request to delete the objects but also the actions taken within CloudHealth to authorize the deletion.

This scenario was set up to require manual authorization, but CloudHealth by VMware could have automatically removed the offending objects.
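The difference between manual authorization and automatic removal comes down to one switch. The hypothetical Python class below sketches that notify-then-delete flow; it is not CloudHealth's implementation, and `delete` is any callable you supply.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Remediation:
    """Hypothetical notify-then-delete flow for flagged EC2 instances."""

    delete: Callable[[str], None]  # caller-supplied deletion function
    auto_approve: bool = False     # True = remove offenders without waiting
    pending: list[str] = field(default_factory=list)
    notifications: list[str] = field(default_factory=list)

    def handle(self, instance_ids: list[str]) -> None:
        # Always notify first, mirroring "Action 1 - Notify via Email".
        self.notifications.append("Flagged for deletion: " + ", ".join(instance_ids))
        if self.auto_approve:
            for instance_id in instance_ids:
                self.delete(instance_id)
        else:
            # Manual mode: hold deletions until someone authorizes them.
            self.pending.extend(instance_ids)

    def approve(self) -> None:
        """Authorize and run every pending deletion."""
        for instance_id in self.pending:
            self.delete(instance_id)
        self.pending.clear()
```

In a real environment, `delete` might wrap boto3's `ec2.terminate_instances(InstanceIds=[instance_id])`; here it is left abstract so the flow can be exercised without touching AWS.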

Conclusion

Every time I talk or think about what I did with those AWS keys and Github, it still hurts! That being said, I have learned from my mistake and I am excited about the Open Source, 3rd Party and VMware solutions available to help overcome human errors like the one described above.

While I chose not to go down that path in this blog, VMware IT combines data from VMware Secure State and CloudHealth by VMware to determine the Total Cost of Incident, providing a clear picture of impact.

Each of the methods, tools and solutions mentioned in this blog is part of a broader strategy and plan. This provides a glimpse of what can be done in either a preventative or reactive fashion. Security has never been “easy”, but let's make sure we don’t put the blame on the Public Cloud when it was our fault (or my fault) to begin with.