How to detect and prevent data breaches in AWS?

Cloud security breaches have dominated the news headlines over the last several years. What’s surprising is that almost every one of these breaches was due to a simple cloud setting that was not properly configured. The number of policies, roles and interconnections between various objects (EC2, S3, RDS, etc.) configured by different teams across different clouds is hard to manage. However, with over a billion customer accounts and data records already exposed over the internet, it’s time we step back to assess the vulnerabilities we are dealing with and rethink our approach to keeping a cloud posture secure.

Misconfigurations essentially lead to two main security threats:

- Data breaches - There are numerous articles on the internet about enterprises losing data because some security policy on AWS, Azure or GCP was misconfigured. In many cases the exfiltrated data is customer data, generally running to millions of lost customer records. These breaches are well publicized not only because of the size of the exfiltration, but also because they have impacted well known, large-scale enterprises. However, many small to mid-size companies are regularly losing data as well. These smaller incidents go unreported and stem from the same issues larger companies face. They range from S3 bucket misconfiguration and unencrypted backups to harder-to-spot firewall configuration issues or open access policies that allow an attacker to elevate privileges from a machine to a variety of data sources.

- Compute-jacking - In this scenario, criminals obtain security credentials that are left exposed, for example in Git repositories or open storage locations. The credentials are used to break into AWS/Azure/GCP accounts and spin up machines for various purposes, Bitcoin mining being one. It’s a “free” resource for them. While most hyper-scalers don’t admit it, a significant number of users “accidentally” place their hyper-scaler credentials on GitHub. According to an NCSU study, 200k unique cryptographic keys and tokens were found across 100k GitHub projects. While these keys in most cases didn’t last more than 2 weeks, the damage was already done. Bots scan GitHub for these keys, and within an hour the account they are tied to gets hacked. It takes at least a day to find out and recover.

In the end, once a data breach or compute-jacking incident has begun, it’s an incident, and some data and money are already lost. But with proper configuration hygiene and relevant alerts or actions set up, you get a view into what might be wrong early enough to prevent issues. Hence in this blog, we will examine:

- A sample data breach scenario (the compute-jacking scenario will be another blog)

- Common misconfigurations associated with it

- How to alert and ensure information is surfaced for preventive measures.

While alerting is fairly easy if the right tools are in place, the harder problem is correlating the various issues into a potential larger threat. Many times we tend to look at cloud objects and their configurations in silos, without taking into account the other objects and services that are impacted. These approaches tend to overwhelm SOC analysts with noise. This is where VMware Secure State helps. We will show how it can not only alert but also help analyze the situation and inter-relate objects and issues.

Sample Data Breach Scenario

Let’s assume we have ABC Co, which uses AWS heavily and has a large footprint. It’s using EC2, Lambda, S3, RDS, EKS, and other popular services. It is also using IAM significantly, with integrations back to on-prem AD to manage authorization on resources. However, because of the large footprint it has a significant proliferation of AWS IAM:

- roles

- policies

- users

- groups

The roles are associated not only to users but also to services (AWS) and deployed applications. It’s the “ephemeral” nature of the applications and services that makes managing roles and associations harder. For example, an EC2 instance can be generated with access to RDS enabling administrators to access and manage the RDS tables and data. However, at the end of the project the EC2 instance might still remain as well as the policies attached to the EC2 instance. This becomes a security hole for RDS.

The association of roles with particular policies is probably one of the harder issues to pinpoint and clean up in avoiding a data breach. We will highlight this in the issue ABC Co faced.

ABC Co stored a significant amount of user data for its e-commerce app on S3, mostly for archiving and backup from various databases like RDS. It also had several EC2 instances that were configured with READ access to S3, which allowed administrators to access and manage the S3 buckets as needed. The company had also intended that only specific IP address ranges could SSH into the machines.

However, they misconfigured the firewall by allowing too broad an IP address range for SSH access on port 22.

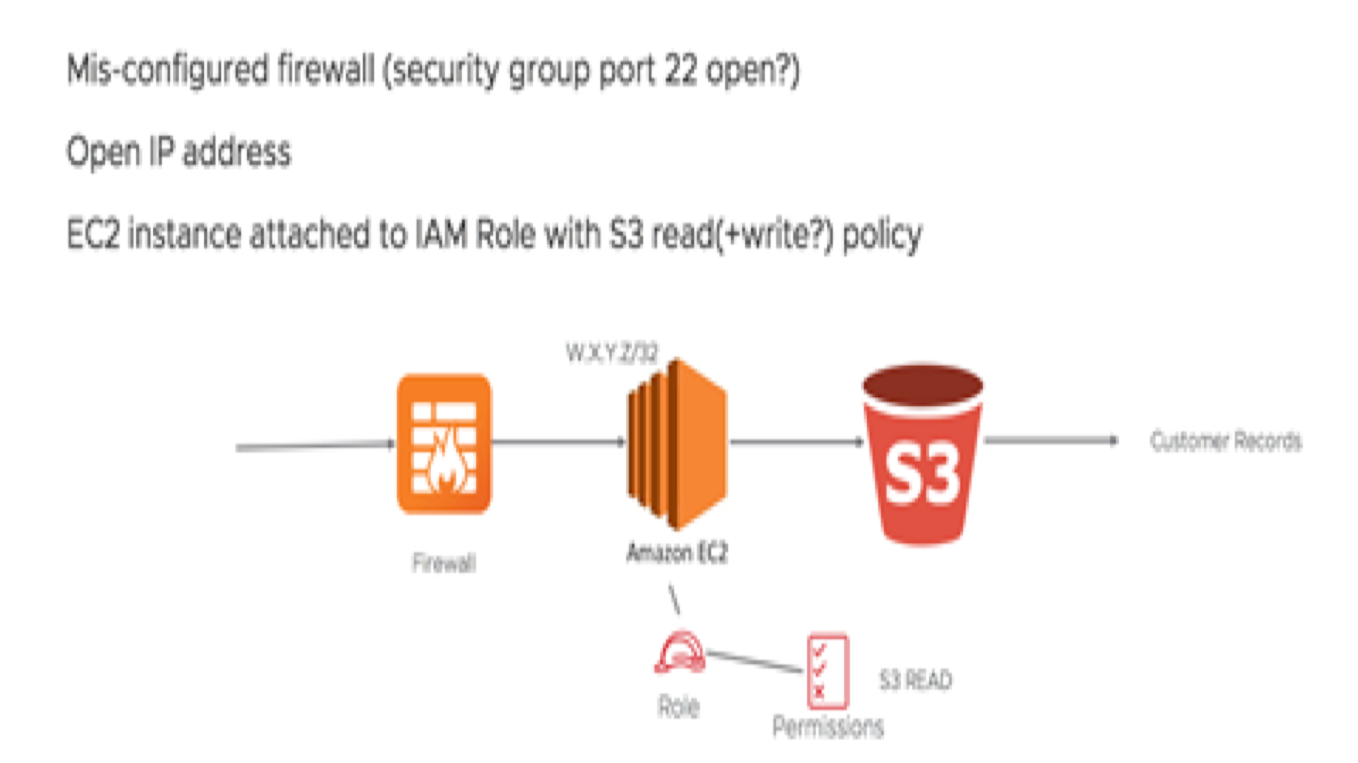

Here is the diagram of the configuration:

Let’s review the configuration issues:

- IP address range was mis-configured allowing non-company IP addresses to SSH in.

- SSH was turned on (port 22) - it should only be allowed from a known entity (a single trusted EC2 instance), not directly from an IP range

- The EC2 instance had an S3 read role (AWS policy AmazonS3ReadOnlyAccess) - not a recommended configuration since it allows anyone with access to the machine to access S3

- The AWS CLI was loaded on the machine

- S3 didn’t have proper ACLs configured (i.e. nothing)

- S3 didn’t have a policy restricting access to only authorized users/roles (i.e. blank policy)

- S3 wasn’t encrypted (this wouldn’t have mattered in this situation but it’s generally a good practice to have it)
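The first two issues in this list can be checked mechanically. The following is a minimal sketch, assuming the security group rules have already been fetched and have the same shape as the `IpPermissions` list that EC2 returns for a security group. The function name `risky_ssh_rules` and the trusted range `203.0.113.0/24` are hypothetical and used only for illustration.

```python
import ipaddress

# Hypothetical company range, for illustration only (documentation address space).
TRUSTED_CIDRS = [ipaddress.ip_network("203.0.113.0/24")]


def risky_ssh_rules(ip_permissions, trusted=TRUSTED_CIDRS):
    """Return IPv4 CIDRs that can reach port 22 but are not inside a trusted range."""
    risky = []
    for perm in ip_permissions:
        protocol = perm.get("IpProtocol")
        if protocol == "-1":
            covers_ssh = True  # "-1" means all protocols and all ports
        elif protocol == "tcp":
            covers_ssh = perm.get("FromPort", 0) <= 22 <= perm.get("ToPort", 65535)
        else:
            covers_ssh = False
        if not covers_ssh:
            continue
        for ip_range in perm.get("IpRanges", []):
            network = ipaddress.ip_network(ip_range["CidrIp"], strict=False)
            # Flag the rule unless the whole range sits inside a trusted network.
            if not any(network.subnet_of(t) for t in trusted):
                risky.append(str(network))
    return risky
```

A rule that admits a range wider than the company's own, which is the mistake ABC Co made, shows up in the returned list. IPv6 ranges are ignored here to keep the sketch short.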

At some future time a hacker:

- accessed the EC2 instance on port 22 (from a non-company IP address that was in the IP address range)

- utilized http://169.254.169.254/latest/meta-data/ on the EC2 instance to get role and credentials information that is available to the EC2 instance

- used the credentials to then proceed to scan S3 buckets from the EC2 instance

- after noting what was of value, proceeded to exfiltrate the data from the open S3 buckets from a local machine. (Because the role had read access and no other policies/ACLs were configured, even an encrypted S3 bucket wouldn’t have helped because the data would be decrypted for “authorized access”)

ABC Co lost a significant amount of user records (millions).
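The last step of the attack, using instance credentials from a local machine, leaves a trace in CloudTrail. The sketch below is one possible way to look for it, not a description of how any particular product does it. It assumes CloudTrail records have already been parsed into dictionaries, relies on the fact that the role session name for instance profile credentials is the instance ID, and treats `10.0.0.0/8` as a hypothetical stand-in for the addresses the company's own instances call AWS from.

```python
import ipaddress

# Hypothetical ranges that the company's own instances call AWS from.
EXPECTED_NETWORKS = [ipaddress.ip_network("10.0.0.0/8")]


def instance_credentials_used_elsewhere(events, expected=EXPECTED_NETWORKS):
    """Return CloudTrail-style events where instance role credentials
    were used from an address outside the expected networks."""
    flagged = []
    for event in events:
        identity = event.get("userIdentity", {})
        if identity.get("type") != "AssumedRole":
            continue
        # principalId looks like "<role id>:<session name>"; for instance
        # profiles the session name is the instance ID.
        session_name = identity.get("principalId", "").partition(":")[2]
        if not session_name.startswith("i-"):
            continue
        try:
            source = ipaddress.ip_address(event.get("sourceIPAddress", ""))
        except ValueError:
            continue  # calls made by AWS services show a DNS name, not an IP
        if not any(source in net for net in expected):
            flagged.append(event)
    return flagged
```

In a real account the expected networks would be the NAT gateway, Elastic IP or VPC endpoint addresses actually in use, so the list needs to be maintained alongside the network design.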

Common misconfigurations leading to Data breaches:

While we highlighted some of the misconfigurations ABC Co made, many more are possible. Here are some examples, grouped into two general types: obvious and complex.

Obvious misconfigurations:

1. S3 bucket is publicly accessible and unrestricted or FULL access to Authenticated Users.

2. S3 bucket encryption is not enabled

3. S3 bucket ACLs are mis-configured

4. S3 object (the data) ACLs are mis-configured

5. IAM policies that might override the ACLs

6. IAM policies are simply not configured

7. Pre-signed URLs exist
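Several of these obvious cases can be spotted by reading the bucket policy document itself. As a rough sketch, assuming the policy JSON has already been retrieved, the hypothetical helper below returns `Allow` statements that name a wildcard principal and carry no `Condition`. It is deliberately simplified: it ignores `NotPrincipal`, does not evaluate conditions, and does not replace a full policy analysis.

```python
import json


def public_allow_statements(policy_document):
    """Return Allow statements with a wildcard principal and no Condition."""
    if isinstance(policy_document, str):
        policy = json.loads(policy_document)
    else:
        policy = policy_document
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be a bare object
        statements = [statements]
    findings = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        principal = stmt.get("Principal")
        aws = principal.get("AWS") if isinstance(principal, dict) else None
        is_wildcard = (
            principal == "*"
            or aws == "*"
            or (isinstance(aws, list) and "*" in aws)
        )
        if is_wildcard and "Condition" not in stmt:
            findings.append(stmt)
    return findings
```

An empty result does not prove the bucket is private, since ACLs and IAM policies (items 3 to 6 above) can still grant access.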

More complex misconfigurations

1. EC2 instance with access rights to the S3 bucket has port 22 open for public access

2. S3 READ and/or admin policy is attached to a role that is provided to numerous objects (i.e. EC2 or even a user)

3. Security groups mis-configure the allowable IP address range that can access EC2, or other objects which might allow access to S3

4. A shared key exists, allowing other users (internal or external) to “securely” access EC2 (which might have access to S3) etc.

The purpose of highlighting potential misconfigurations is to provide an appreciation for the large number of combinations that could cause breaches from S3. Couple this with misconfiguration of other elements, and the combinations become astronomical. With most companies building and deploying a number of applications into public cloud, enforcing simple configuration standards becomes an increasingly hard problem because each team can have unique and valid configuration differences that don’t pose the same risk to the organization. So while no one is ever going to pinpoint the perfect list of what to configure, here are some general recommended baselines:

- Enable cloud services like AWS CloudTrail, AWS VPC Flow Logs and GuardDuty, at the least, in all regions and accounts. Together these services provide logging and baseline behavior analysis for user and instance activity.

- Permission boundaries must be set for every user. They control the maximum permissions that a user can have, which helps prevent privilege escalation. Privilege escalation of this kind was used in the data breach example mentioned above.

- MFA must be enabled for the root account as well as for all users who have console access.

- For data services like S3, access to buckets should be restricted to only those users who require it. AWS recommends using IAM policies or S3 bucket policies to restrict access.

- Enable logging on specific services and resources, e.g. S3 logging and VPC logging.
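To make the permission boundary baseline concrete: an action is only permitted when both the identity policy and the boundary allow it. The sketch below models just that intersection under heavy simplification. It ignores explicit denies, resource scoping, conditions and organization-level policies, and the function names are hypothetical.

```python
from fnmatch import fnmatchcase


def matches_any(action, patterns):
    """True if the action matches one of the IAM-style wildcard patterns.

    IAM action names are case-insensitive, so both sides are lowercased.
    """
    return any(fnmatchcase(action.lower(), p.lower()) for p in patterns)


def effective_allow(action, identity_allows, boundary_allows):
    """Permit an action only if BOTH the identity policy and the boundary allow it."""
    return matches_any(action, identity_allows) and matches_any(action, boundary_allows)


# A user with an administrator-style policy, capped by an S3 read-only boundary.
identity = ["*"]
boundary = ["s3:Get*", "s3:List*"]

print(effective_allow("s3:GetObject", identity, boundary))    # True
print(effective_allow("iam:CreateUser", identity, boundary))  # False
```

Even though the identity policy allows everything, the boundary keeps the user from creating IAM users, which is the escalation path a boundary is meant to close.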

To address these configuration risks and more, organizations need to deploy a Continuous Verification System that is able to target not only the best practices but the specific controls for each of their cloud accounts and applications.

How can you detect this scenario?

As we mentioned earlier in the blog, while there are no guarantees that an account will never be breached, setting up proper alerts and remediation actions will certainly help you minimize the overall risk of security breaches.

VMware Secure State, a SaaS service that is part of our multi-cloud solution portfolio led by CloudHealth, allows more robust and proactive security in public clouds. VMware Secure State uses an integrated security approach that is DevOps friendly, and alerts users on configuration issues based on custom rules as well as violations of compliance frameworks such as CIS, NIST, HIPAA, PCI and GDPR. The service also provides the ability to inspect interconnections between objects and find vulnerabilities that arise from a combination of configured services (interconnected service risks).

If ABC Co had used VMware Secure State (VSS from here on), they would have been able to see the following:

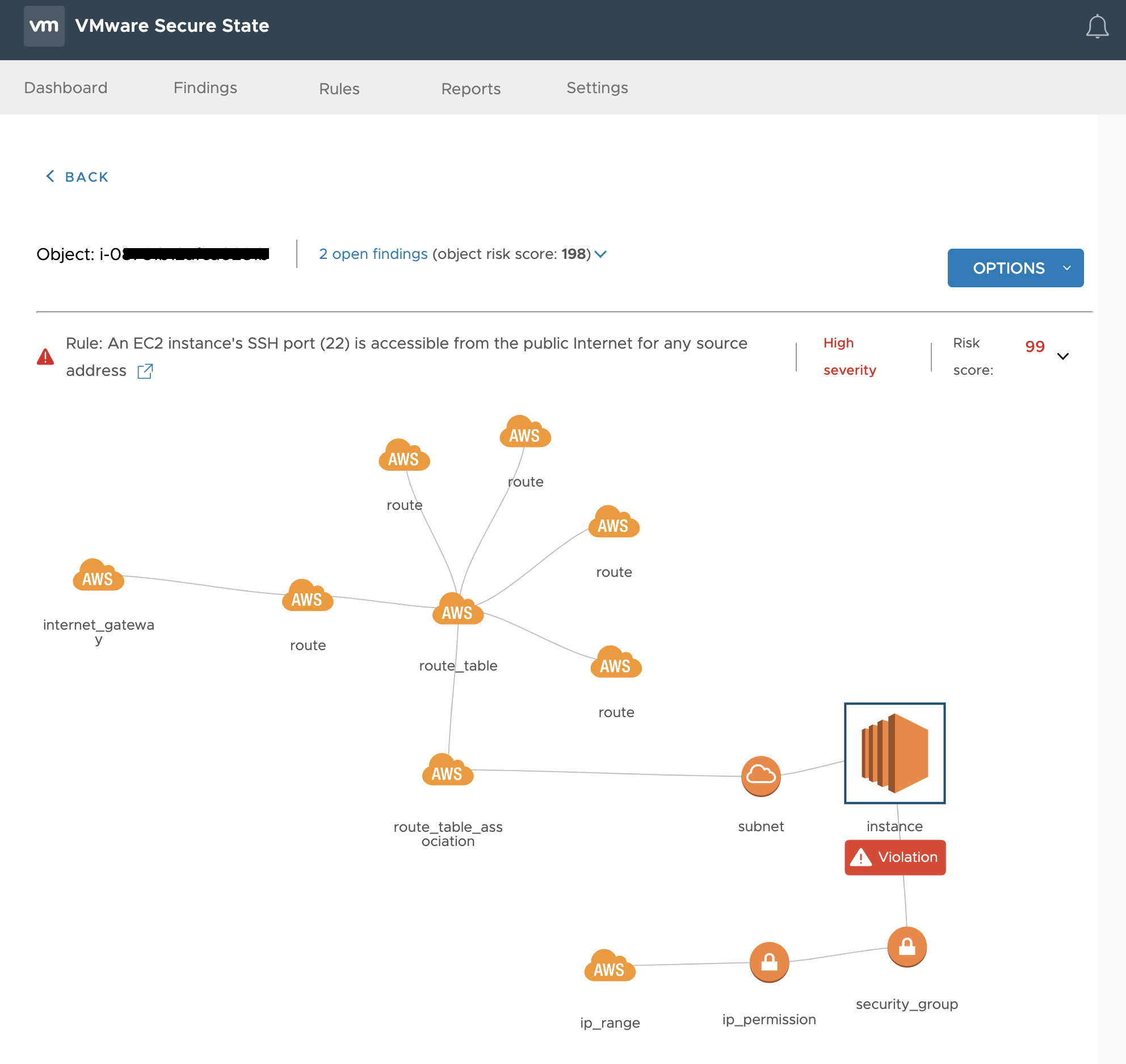

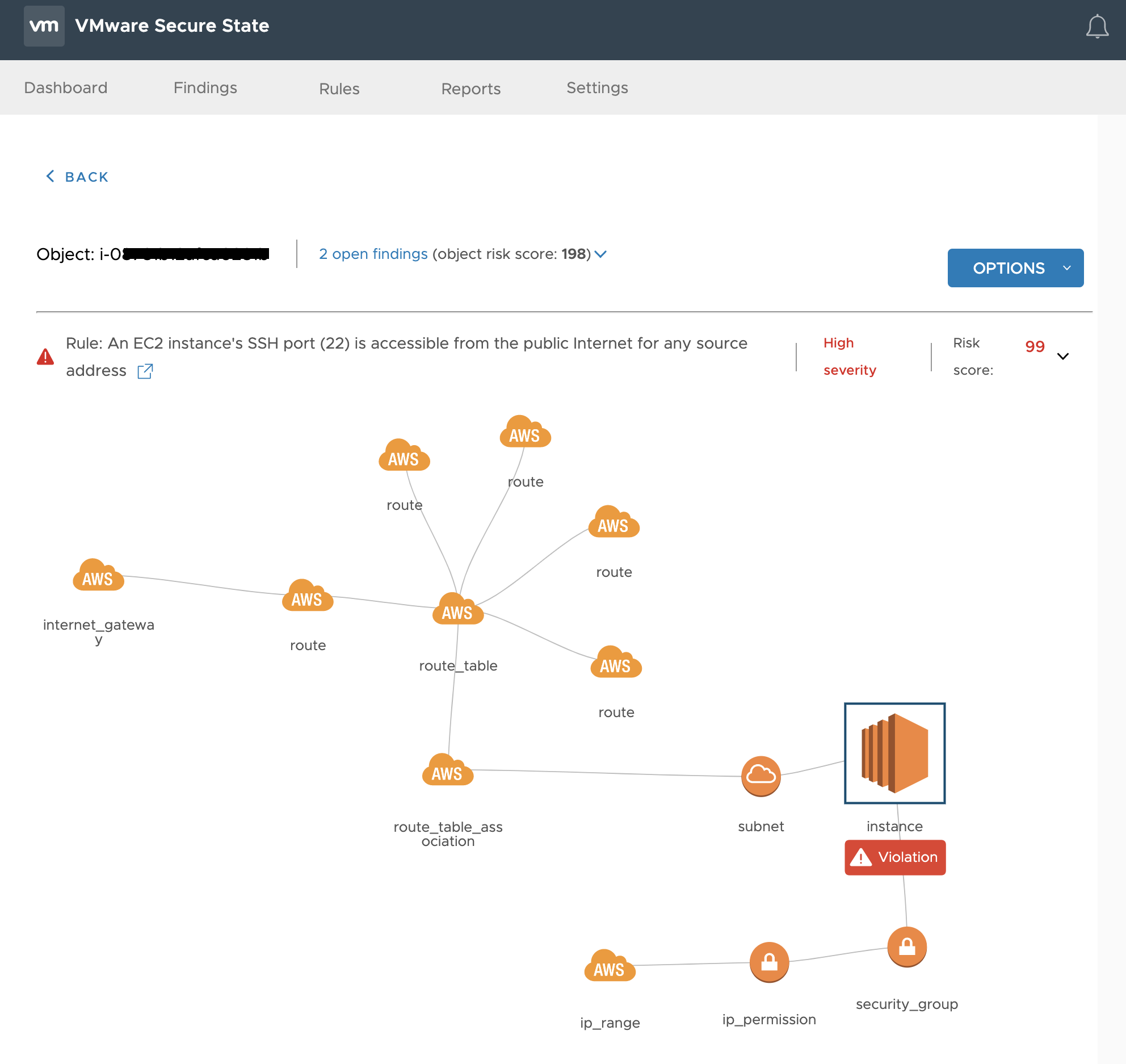

1. VSS would have detected and alerted that EC2 instance has port 22 open for public access – (https://kb.securestate.vmware.com/public-instance-world-open-TCP-22.html)

The diagram above shows how VSS pinpoints the precise issue against the instance, and the interconnected objects like subnets, route tables, etc.

Instead of opening port 22 on application instances, the best practice would be to use a jumpbox (Mgmt box) and restrict SSH access to only known IP addresses and users. The jumpbox can then be used to SSH into other EC2 instances. Ensure that Public IP addresses are assigned to only those EC2 instances which actually need it.

There are other services, like AWS Systems Manager, which can be used to manage SSH access without a jumpbox, but they have some limitations.

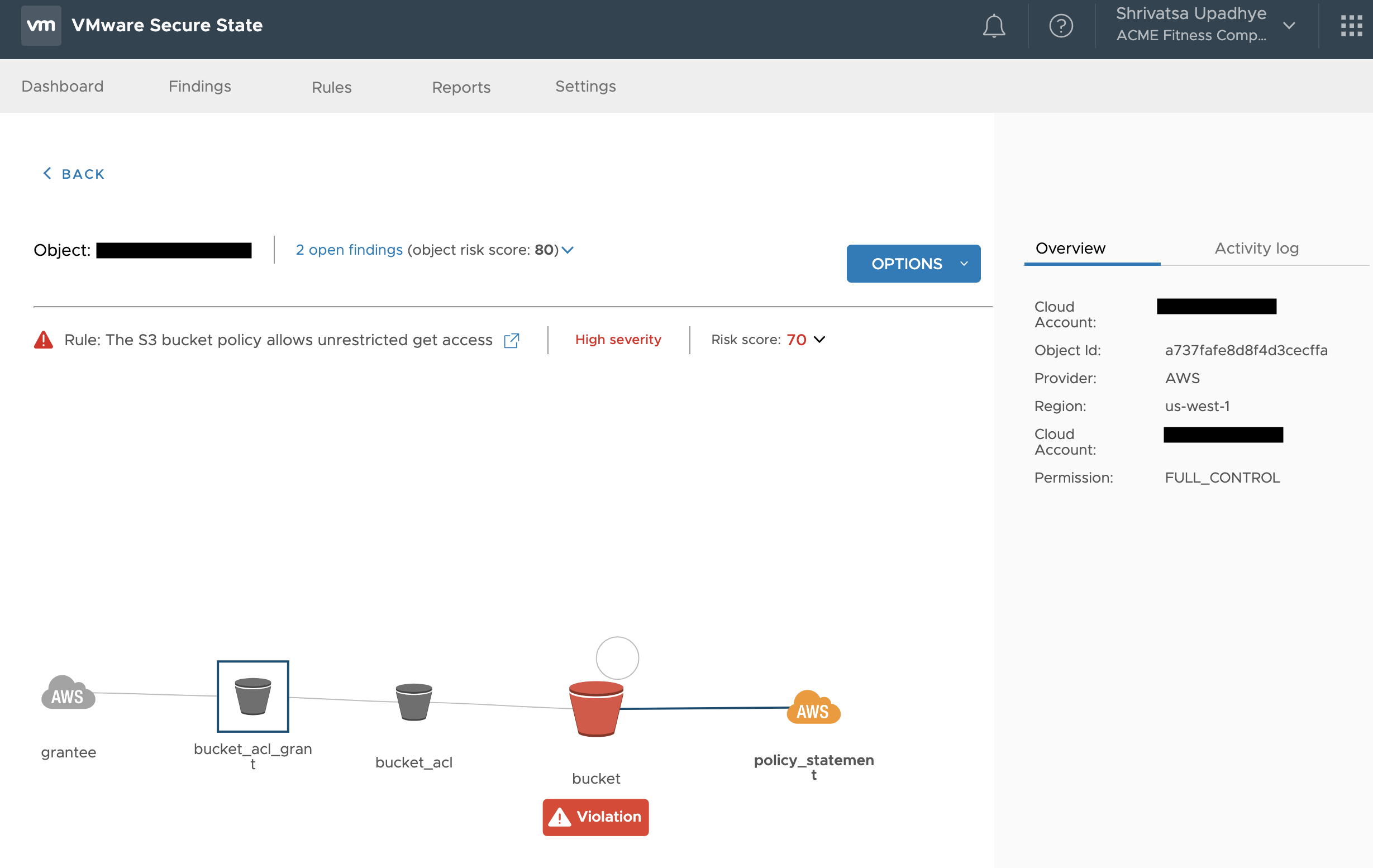

2. VSS would have identified that the S3 bucket has unrestricted or FULL access for Authenticated Users. This matters here because in this breach the criminal actually used credentials to get the data. – (https://kb.securestate.vmware.com/s3-authenticatedusers-access.html)

This VSS violation chain shows the violation on the S3 bucket and the policy which gives “Authenticated” users permission to fully control the S3 bucket (Permission: FULL_CONTROL). This allows them to modify ACLs and to read, write or delete the objects or the bucket itself.

Access to S3 buckets should be restricted even for authenticated users.
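For readers who want to check for this grant outside of VSS, the condition is visible in the bucket ACL. The following is a small sketch, assuming the ACL has already been fetched and has the `Grants` structure that S3 returns for a bucket ACL. The helper name `broad_acl_grants` is hypothetical.

```python
# Predefined S3 groups: everyone, and anyone with any AWS account.
GROUP_URIS = {
    "http://acs.amazonaws.com/groups/global/AllUsers": "AllUsers",
    "http://acs.amazonaws.com/groups/global/AuthenticatedUsers": "AuthenticatedUsers",
}


def broad_acl_grants(acl):
    """Return (group, permission) pairs granted to one of the broad predefined groups."""
    findings = []
    for grant in acl.get("Grants", []):
        grantee = grant.get("Grantee", {})
        group = GROUP_URIS.get(grantee.get("URI"))
        if grantee.get("Type") == "Group" and group:
            findings.append((group, grant.get("Permission")))
    return findings
```

Note that “AuthenticatedUsers” means any AWS account holder, not only users of the company's own account, which is why a FULL_CONTROL grant to that group is so dangerous.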

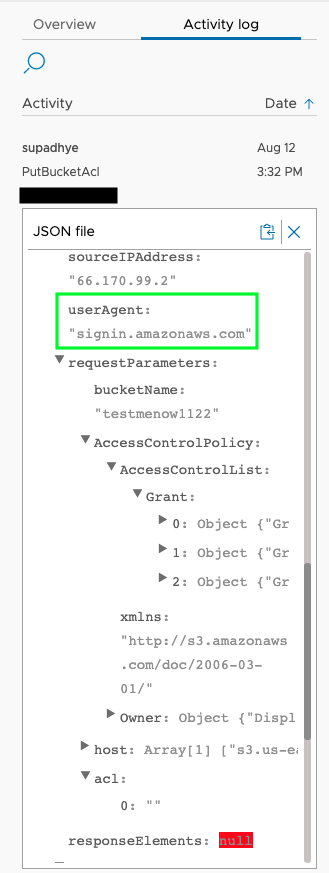

Apart from this, VSS can also show the activity log on the object. Here, the admin can use it to narrow down the actions that were performed. The activity log can show whether the user modified the resource using access keys (via CloudFormation, Terraform or another SDK) or through the AWS console. This helps determine which kind of credentials were compromised: user login credentials or keys.

The activity log also provides detailed information about the changes that were made. As you can see above, the user seems to have updated the ACLs for the S3 bucket.

3. In the ABC Co incident, a shared SSH key was used across different EC2 instances. VSS could have detected this easily because of its ability to build an “interconnected graph model” of the customer’s environment across different resources. https://kb.securestate.vmware.com/administrative-policy-exposed-by-connected-ssh-credential.html

The power of VMware Secure State’s Interconnected Cloud Security Model (ICSM) can be seen here, where it detects related violations across various services. In this example, we are looking at EC2 and IAM services.

A publicly routable and addressable instance has the same SSH key as an instance with an administrative policy. This complex violation is composed of disparate findings:

- Instance 1 is publicly routable and accessible.

- Instance 2 is private but has an IAM role attached to it with an “admin” policy. (With this access the instance can perform any action on the account)

- Instances 1 and 2 share the same SSH key.

In this scenario, the user, who has the SSH-Key but NO privileged access, can ssh into the public instance (Instance 1) and then access the instance with admin privileges (Instance 2) and potentially make any number of changes within the account. So even if you had logging enabled, the logs would show that the “instance” was the one which made changes with no trace of the user.

This violation was exploited as part of the data breach in the example mentioned above.
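The correlation described here can be illustrated with a toy inventory. The sketch below is not how VSS implements its graph model. It only shows the idea of joining three separate facts (public exposure, admin role, shared key pair) into one finding. The `Instance` record and its field names are hypothetical.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Instance:
    instance_id: str
    key_name: str          # name of the SSH key pair on the instance
    is_public: bool        # publicly routable and addressable
    has_admin_role: bool   # instance role carries an administrative policy


def admin_exposed_by_shared_key(instances):
    """Return (public_id, admin_id) pairs where a public instance
    shares its SSH key with an instance that has an admin role."""
    public = [i for i in instances if i.is_public]
    admin = [i for i in instances if i.has_admin_role]
    return [
        (p.instance_id, a.instance_id)
        for p, a in product(public, admin)
        if p.instance_id != a.instance_id and p.key_name == a.key_name
    ]


inventory = [
    Instance("i-web", "team-key", is_public=True, has_admin_role=False),
    Instance("i-ops", "team-key", is_public=False, has_admin_role=True),
    Instance("i-db", "db-key", is_public=False, has_admin_role=False),
]
print(admin_exposed_by_shared_key(inventory))  # [('i-web', 'i-ops')]
```

None of the three facts is alarming by itself, which is why checks that look at one object at a time miss this path.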

4. VSS would have alerted that Encryption keys from KMS service were not used or not enabled within this account – (https://kb.securestate.vmware.com/kms-unused.html)

5. VSS would have detected and alerted within seconds that S3 bucket configurations (ACLs) were being modified by authenticated users (One could potentially modify the S3 configurations before or after copying the data) – (https://kb.securestate.vmware.com/s3-authenticatedusers-write-acp.html). Near real-time visibility is possible because of the architectural decisions made within VSS.
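The underlying signal for this kind of alert is available in CloudTrail. As a minimal sketch, assuming CloudTrail management events have been parsed into dictionaries, the hypothetical helper below picks out bucket permission changes and who made them. The set of watched event names is an illustrative choice, not an exhaustive list.

```python
# S3 management events that change who can access a bucket (illustrative subset).
WATCHED_EVENTS = {"PutBucketAcl", "PutBucketPolicy", "DeleteBucketPolicy"}


def bucket_permission_changes(events):
    """Return (bucket, event name, caller ARN) for S3 permission changes."""
    changes = []
    for event in events:
        if event.get("eventSource") != "s3.amazonaws.com":
            continue
        if event.get("eventName") not in WATCHED_EVENTS:
            continue
        # requestParameters can be null in CloudTrail records.
        bucket = (event.get("requestParameters") or {}).get("bucketName")
        caller = event.get("userIdentity", {}).get("arn")
        changes.append((bucket, event["eventName"], caller))
    return changes
```

Scanning delivered log files this way is delayed by CloudTrail's delivery interval, so it is better suited to investigation after the fact than to alerting within seconds.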

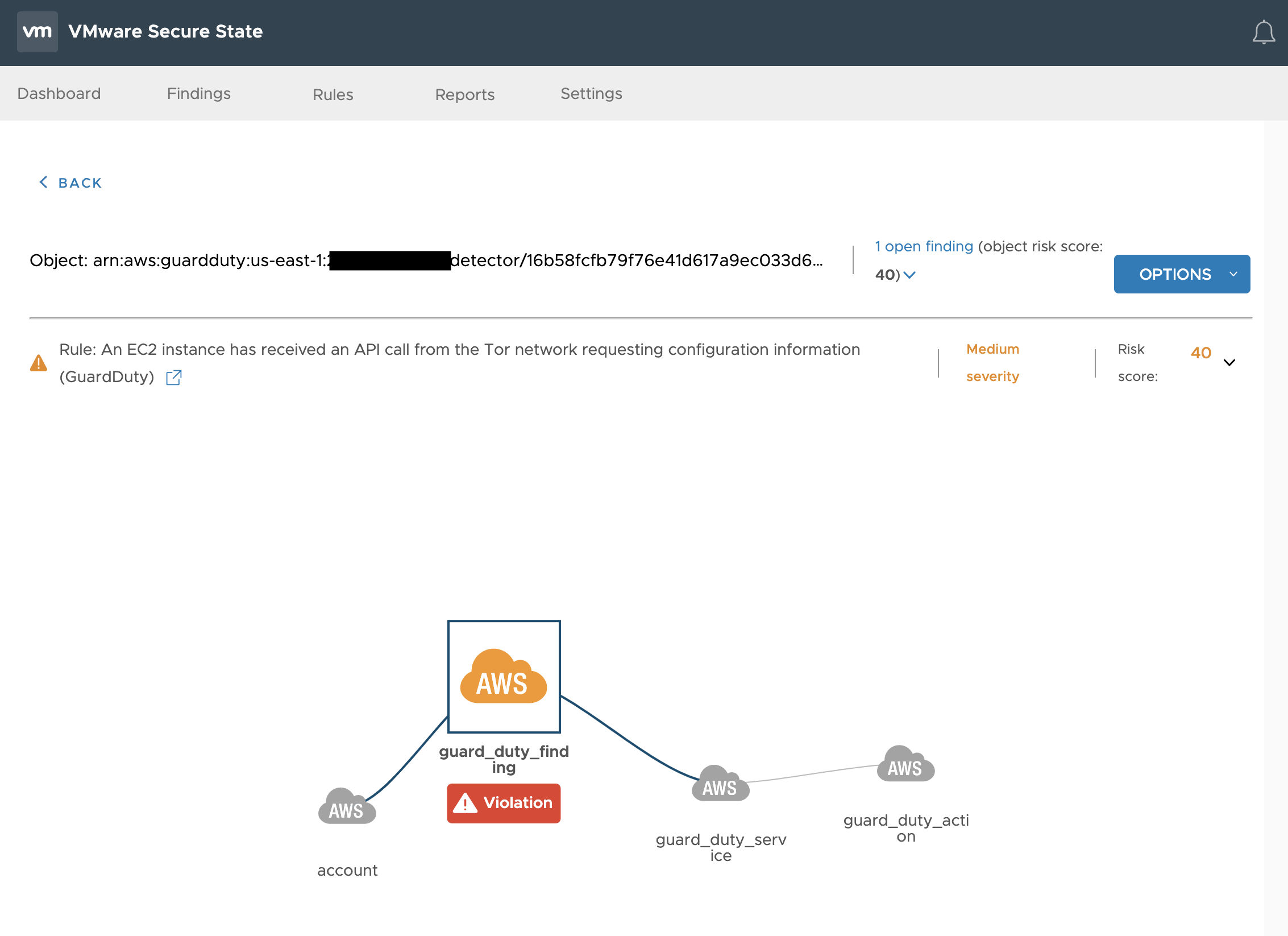

6. VSS and AWS GuardDuty integration. VSS is not meant to replicate ALL possible security tools; rather, it’s meant to help bring in data and tag it appropriately to the objects and the situation. Hence you should turn on GuardDuty in AWS, and VSS will take in GuardDuty alerts and process them. Here are some examples:

a. VSS can alert the user about API calls from a TOR network https://kb.securestate.vmware.com/aws-guardduty-tor-ip-caller-unauthorized-iamuser.html

b. VSS can alert based on GuardDuty events that the IAM credentials were used for API access from a TOR network https://kb.securestate.vmware.com/aws-guardduty-tor-ip-caller-recon-iamuser.html

7. Identify any and ALL roles that have “AmazonS3FullAccess” or “AmazonS3ReadOnlyAccess” for S3
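The same inventory can be approximated by hand from IAM data. The sketch below assumes you have already collected, for each role, the list of attached managed policies in the shape IAM returns them (`PolicyName` and `PolicyArn`). The function name is hypothetical, and inline policies or customer-managed policies that grant S3 access are not covered.

```python
# AWS managed policies named in the scenario.
S3_MANAGED_POLICIES = {
    "arn:aws:iam::aws:policy/AmazonS3FullAccess",
    "arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess",
}


def roles_with_s3_managed_policies(attachments):
    """Map role name -> sorted names of S3 managed policies attached to it.

    `attachments` maps a role name to a list of dicts with
    "PolicyName" and "PolicyArn" keys.
    """
    result = {}
    for role, policies in attachments.items():
        hits = sorted(
            p["PolicyName"] for p in policies if p["PolicyArn"] in S3_MANAGED_POLICIES
        )
        if hits:
            result[role] = hits
    return result
```

Each role in the result is a candidate for review: which instances or users can assume it, and whether they still need S3 access at all.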

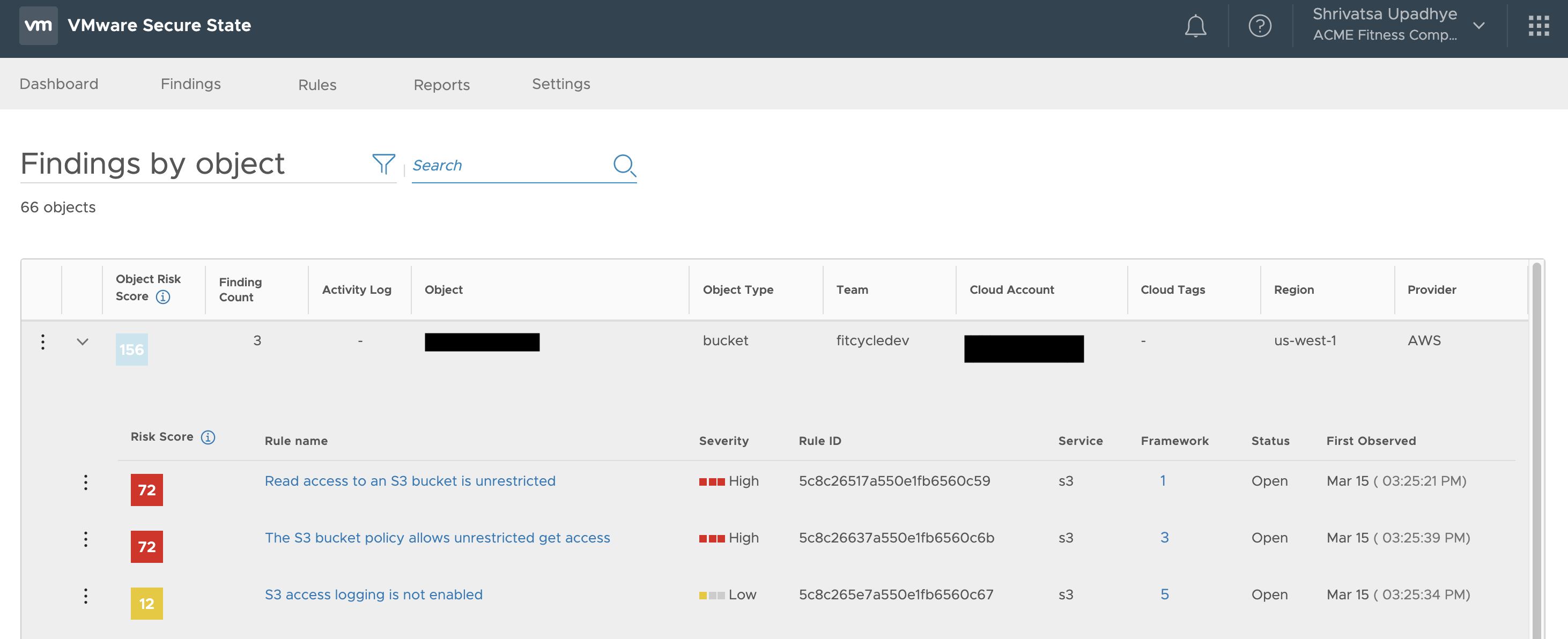

8. Today we have the ability to identify and alert on ALL the violations with respect to a particular object with the pivot view.

Below, you can see how the pivot view provides all the related violations for an object. In this case, we are looking at all the different violations associated with a particular S3 bucket which include Unrestricted read access, unrestricted ACL modification access and no logging enabled.

The connected model of the customer’s environment can be queried by using a feature in VSS called “Explore”. This allows the user to discover objects within the cloud account and find their related properties along with other dependent resources. It can be very useful in hunting down the objects that might be impacted in case of a compromise.

As the examples above show, VSS would have detected several key issues for ABC Co in time to prevent the breach. However, as we detailed above, it’s not just the alerts but also the interconnected graph VSS provides that allows for better introspection in all cases. This “context” is more valuable than a simple “list of alerts” that most general security tools provide.

Conclusion:

As we’ve outlined, using a tool like VSS, ABC Co would have been alerted to the following issues:

- SSH was turned on (port 22)

- The EC2 instance had an S3 read role (AWS policy AmazonS3ReadOnlyAccess) - not a recommended configuration since it allows anyone with access to the machine to access S3

- S3 didn’t have proper ACLs configured (i.e. nothing)

- S3 had READ and/or WRITE access

- S3 wasn’t encrypted (this wouldn’t have mattered in this situation but it’s generally a good practice to have it)

These alerts, combined with a rigorous process of analyzing and acting on the VSS recommendations, would have prevented the data breach. In addition, VSS would have alerted when the actual breach occurred through its GuardDuty integration. For more information about VMware Secure State please go to: https://go.cloudhealthtech.com/vmware-secure-state